Automated driving is not easy. At all. In fact, it’s pretty astounding that we’ve come as far as we have. The underlying tech behind all automated driving systems, even Level 2 supervised ones, is incredibly impressive. It’s the sort of thing that seemed nearly impossible until about 20 years ago, when Stanford University’s very modified VW Touareg won the DARPA Grand Challenge and drove 132 miles through the Mojave Desert all by itself, at an average speed of around 19 mph. Now Waymo has fleets of robotaxis driving the busy streets of major cities every single day, and, for the most part, they do a pretty good job. Sure, there have been incidents and accidents, hints and allegations, but overall? It generally works. That’s part of why I’m so surprised about an incident with a Waymo passing a stopped school bus that has triggered an investigation by the National Highway Traffic Safety Administration (NHTSA).

Here’s what happened, based on video of the incident captured by a witness. On September 25 in Atlanta, this Waymo automated vehicle was seen ignoring the many flashing lights and pop-out stop sign of a school bus and passing it instead of stopping. This is, of course, a severe traffic infraction, with pretty harsh penalties in most states, because the reason a school bus stops and turns on that mini-carnival of lights is to ensure the safety of the kids entering or exiting the bus.

Those lights are so numerous and obvious because they mean kids are around, kids nobody wants to hit with a car. This is a big deal. Here’s the video of what happened:

Here’s a news report showing the same clip and including commentary from local officials:

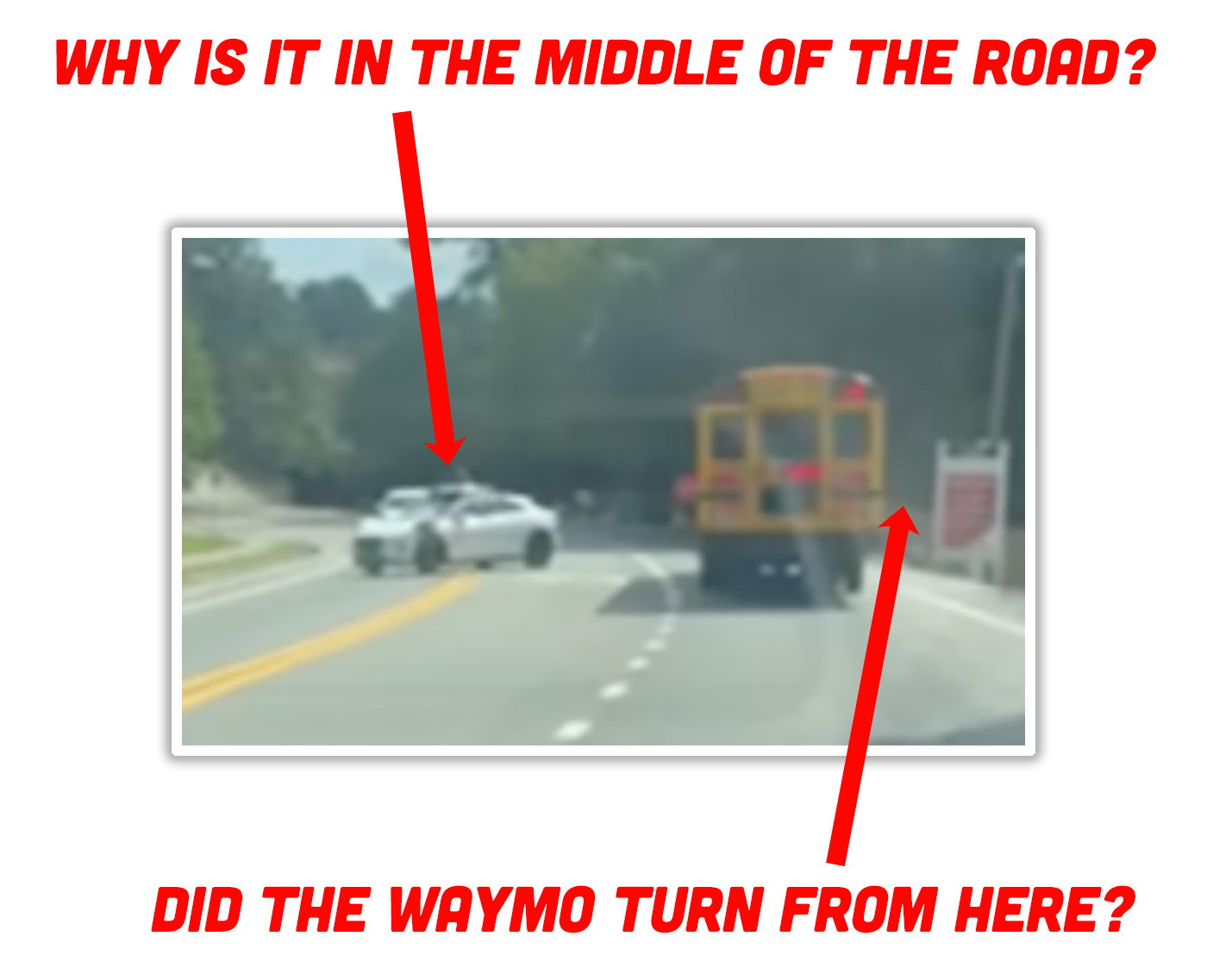

Let’s just look at what the Waymo did to try and make sense of it all; here are some stills from the video:

So, we see the Waymo’s starting position in front of the bus, sorta perpendicular, in the middle of the street, crossing the double yellow line. We’ll come back to that. You can see the bus had all of its warning lights blinking, the pop-out stop sign deployed, everything, and then we see the Waymo just cruising past it.

I suspect the Waymo must have made a turn in front of the bus to end up in the middle of the road like that? The video doesn’t include anything from before this point, so I can’t be certain, but I also can’t figure out how it would end up in this position otherwise. And, it hardly matters, because at this point the damage is already done: that turn should never have been executed in front of a stopped school bus with all its warning lights flashing.

The Waymo seems to be at least partially aware something is amiss, because it’s making that turn quite slowly, though it notably does not fully stop at any point. The narrator of the video states, “the Waymo just drove around the school bus,” which seems to support the idea that it made a turn in front of the bus, which is, in this case, quite illegal.

Now, here’s what I really don’t understand: how can this happen? Not “this” as in an AV making an error – we know there are myriad ways that can happen – but this specifically, a school bus-related issue. I can’t think of an element that regularly appears on roads more predictable than a school bus. They have essentially the same overall look across the country – same general scale, same general markings, same general colors, same general shape, everything. They have the same predictable behaviors, the same warning lights and signs, and the same cautions that need to be taken around them, and they tend to travel on predictable, regular routes at predictable, regular speeds – every aspect of school buses should be ideal for automated driving.

Really, there’s almost no element of automated driving that is potentially more predictable and understandable than school bus identification and behavior. I know when you’re dealing with messy reality, nothing is easy, but in the spectrum of automated driving difficulty, school buses feel like an absolute gift. The Waymo should have seen and identified that school bus immediately, and that should have triggered some school bus-specific subroutine that told it to, you know, see if the flashing lights and stop sign are active, and if so, stop. And stay stopped until the bus’s lights are off and it’s safely away. This is one of those cases where a potential for false triggers is worth it, because the potential risks are so great.
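To make that idea concrete, here’s a minimal sketch of what such a school bus-specific check might look like. To be clear, this is purely illustrative and has nothing to do with Waymo’s actual software: the `BusObservation` fields, the `school_bus_policy` function, and the choice to treat “can’t see the lights or stop sign” on a stopped bus as a reason to hold are all my own assumptions.

```python
from dataclasses import dataclass
from enum import Enum, auto
from typing import Optional


class Action(Enum):
    PROCEED = auto()
    STOP_AND_HOLD = auto()


@dataclass
class BusObservation:
    """Hypothetical perception output for one detected vehicle."""
    is_school_bus: bool
    lights_flashing: Optional[bool]  # None means "not visible from this angle"
    stop_arm_out: Optional[bool]     # None means "not visible from this angle"
    is_moving: bool


def school_bus_policy(obs: BusObservation) -> Action:
    """Decide what to do for a single perception cycle (illustrative only)."""
    if not obs.is_school_bus:
        return Action.PROCEED

    signals = (obs.lights_flashing, obs.stop_arm_out)

    # Any confirmed warning signal means stop, no matter what else is going on.
    if any(s is True for s in signals):
        return Action.STOP_AND_HOLD

    # A stopped bus whose signals can't be seen is treated as if they were
    # active. This accepts false triggers in exchange for safety.
    if not obs.is_moving and any(s is None for s in signals):
        return Action.STOP_AND_HOLD

    return Action.PROCEED


# A stopped bus approached from an angle where nothing is visible:
print(school_bus_policy(BusObservation(True, None, None, False)))
```

Run on every perception cycle, a check like this would keep the car held until the signals are confirmed off or the bus is moving again. The second branch is the one that matters for an incident like this: when in doubt about a stopped school bus, the sketch errs on the side of stopping.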

Waymo did issue a statement about the incident, though it doesn’t really say all that much:

“The trust and safety of the communities we serve is our top priority. We continuously refine our system’s performance to navigate complex scenarios and are looking into this further.”

Waymo also told Reuters that they have

“…already developed and implemented improvements related to stopping for school buses and will land additional software updates in our next software release.”

Referring to the specific incident, Reuters also reported that Waymo noted the robotaxi

“…approached the school bus from an angle where the flashing lights and stop sign were not visible and drove slowly around the front of the bus before driving past it, keeping a safe distance from children.”

While I have no doubt this is possible, it does open up a lot of questions. Did the Waymo vehicle identify the school bus for what it was at all? If there are potential angles where a school bus’ warning lights can’t be readily seen, should there be other methods considered? Audio tones, or even some sort of short-range RF type of warning specific to automated vehicles to help them identify when a school bus may have children entering or exiting? Again, school buses should be some of the most obvious and understandable regularly encountered vehicles on public roads; there’s no excuse to not be able to deal with them in a robust and predictable manner.

I’m sure Waymo is happy to have NHTSA joining them in this investigation, right?

Loved the Paul Simon reference up there.

Sorry. Apparently mis-responded on another article. Carry on.

I suspect that from the angle that the Waymo encounters the bus only the flashing lights on the right front corner were visible. The little flip out stop sign on the left side was certainly not visible, and any text reading “school bus” or the like was at an angle rendering it illegible.

Of course a human driver would know it’s a school bus, except for all the big yellow buses in NYC, and would know the difference between kids getting off the bus and the bus simply being stopped, and all the variations in equipment in various school districts.

So, the Waymo is at the intersection, and apparently something big on the left is signaling for a right turn and is stopped.

Waymo waits

Nothing happens

Waymo waits

Nothing happens

Maybe big thing can’t turn because Waymo is in the way?

Waymo moves a little

Everyone is stopped.

Is Waymo in the way?

Waymo moves a little

Everyone is stopped.

Is Waymo in the way?

This can continue indefinitely or Waymo can just stop indefinitely.

Waymo has gotten in trouble for stopping indefinitely when confused by emergency vehicles with flashing lights and probably has a “get out of the way” behavior programmed in, so maybe that is a factor here.

This sparked an interesting question at work: what has the right of way, a school bus or an ambulance?

Turns out Police/Fire/Ambulance have right of way but human discretion generally leaves the school bus with right of way as, you know, tiny humanlings on the road.

But in 2055, when the Waymo ambulance is driving me to the hospital after the Optimuses toss me in the back for having too many bratwursts, I’m now concerned it won’t in fact stop. Oh well, not like my insurance will cover it either way.

1 – Mail truck (Federal vehicle)

2 – Emergency vehicle (Although they do have the responsibility to make sure the bus/student situation is safe before proceeding)

3 – Bus

4 – The rest of us.

The obvious question is what sort of logging do they have? It would be useful to have some idea of the logic leading to this, and some video from the car. This assumes Waymo is open and honest and doesn’t delete evidence like Tesla.

“I can’t think of an element that regularly appears on roads more predictable than a school bus.”

Michigan potholes?

So your next 500 Captcha prove-you’re-human challenge will have images of school buses.

Yes but can we call you Al?

Sounds like those kids could use a bodyguard, Betty!