Hybrid minivans are probably at the apex of people hauling right now. If you’re the kind of person who isn’t into crossovers, you can buy a minivan that’ll get over 30 mpg with the whole crew onboard! That’s honestly pretty awesome. Also neat is a feature that your hybrid might have that you might not know about.

Today, Matt Hardigree wrote about how he got 38 mpg with a Kia Carnival, which is fantastic. Olesam shared a neat hybrid trick:

Fun trick I learned on our Pacifica Hybrid that might apply to other manufacturers too: if you open the hood with the vehicle on (or start it with the hood open) the engine will turn on! It’s apparently a safety feature; I guess if the engine’s off you might let your guard down and stick your hand into a dangerous area (fan or belt) that might cause an, uh, uncomfortable interference issue were the engine to suddenly turn on.

I combed through the Chrysler Pacifica forums, and yep, it is a real feature! Through some quick searching, it seems like this is a pretty common hybrid car feature. The Chevy Volt has it, as does the Mazda CX-90. Conversely, there are some hybrids that shut down their engines when the hood is opened, like the Ford Maverick and the BMW X4 M40i. I think this warrants more research, because it’s fascinating!

David says he’s going to build his new WWII Jeep in his driveway. Readers feel his pain and offer an excellent tip. Canopysaurus:

My tip: Harbor Freight sells portable garages (read: tent) with closable front and rear panels for about $170. One of these would easily hold an MB. I used a ShelterLogic storage tent myself, big enough to hold a car. It’s still standing 13 years, three hurricanes, and countless thunderstorms later, not to mention relentless heat. So, I can vouch for the stability of these types of shelters. And when you’re done, collapse them down and dispose of them, or store for future use.

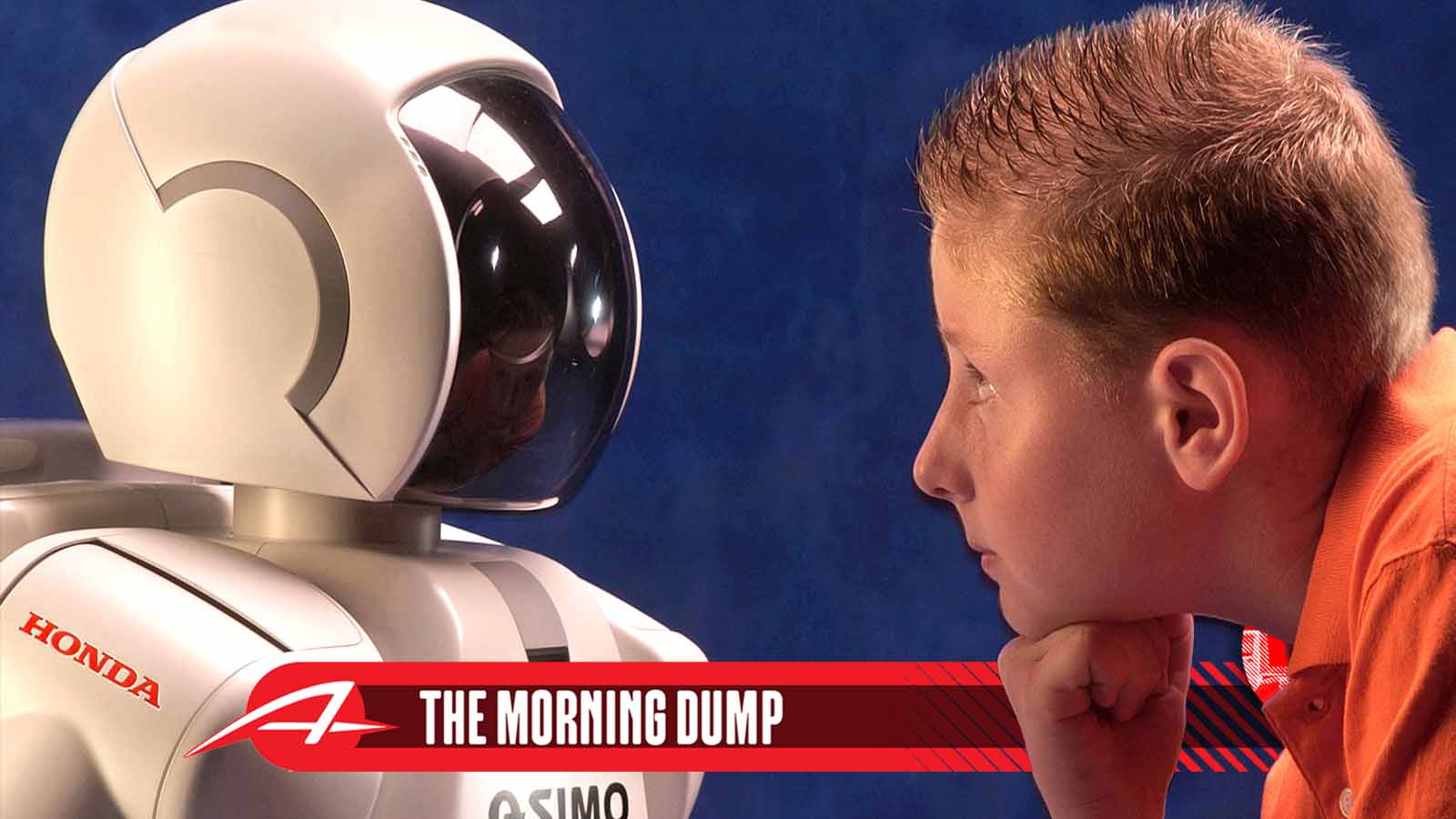

Next, Matt wrote a Morning Dump about AI. TheDrunkenWrench has a great way to visualize it:

One of humanity’s biggest problems is that we LOVE to anthropomorphize things.

AI is just a “Yes, and” slot machine.

It doesn’t give you an answer to something, it gives you what it thinks an answer looks like.

If you ask for it to write code for a task, it just makes what it thinks it should look like. This is the slot machine part. You feed enough ~~coins~~ prompts into the digital one-armed bandit and eventually you get a hit.

You can’t build reliable systems on machines that “learn”, cause at some point it’s just gonna feed ball bearings into the intake because it picked up a BS line somewhere about cleaning the cylinder walls.

Code is dumb and it needs clearly defined instruction sets that DO NOT CHANGE in order to have any level of reliability.

We didn’t teach a rock to think. We tricked everyone into thinking we taught a rock to think.

This will all blow up spectacularly.

Finally, G. K. told a hilarious anecdote about the Rover/Sterling 800 in Mark’s Shitbox Showdown:

Fun fact about the Rover/Sterling 800 series. As a show of faith, Honda allowed Rover to build the European-market Legend at its facility in Cowley, Oxfordshire, alongside the Rover version. However, Honda had no faith in the Brits’ ability to put the cars together correctly, and so set up a finishing line at its Swindon, Wiltshire plant to correct defects before sending the cars to dealers…and there were many defects.

Have a great evening, everyone!

(Top graphic: Chrysler)

My recently-purchased, well-used hybrid won’t allow the engine to run when the hood is open, which is neat and also sort of annoying when you are trying to identify a noise from under the hood (like I was just last night).

Also, I’ve always thought the “Honda fixing Rover’s cars” story was an urban legend sort of thing. If true, I wonder how Rover actually felt about it and what they said publicly (if they said anything at all)?

If true, I assume Rover would have kept as quiet about it as possible. I can imagine Honda had a different idea of what constituted a ‘defect’ from British “that’ll do” Leyland.

I bet you are right, and I suspect that’s why I have always heard it was an urban legend. British Leyland will likely never admit to it, and Honda probably won’t either since I bet they think letting Rover build anything was a mistake.

If you open the hood during engine start-stop on most cars, it will also force a restart.

When we had our Lexus Hybrid, pushing START with the accelerator depressed would force an engine start.

Another COTD? Keep treating me this way and I’ll brave the CAN/US border to come down to get your buses running.

Your point about AI is spot on, though. We anthropomorphize it but most LLMs are effectively a whole bunch of linear regressions stacked in a Jenga tower. It doesn’t give you an answer, it gives you an example of what an answer might look like. That’s why it generates academic papers with citations that never existed, because it’s just generating an example of what an academic paper could look like.

Exactly, and when you do it with code, your IDE doesn’t care who wrote it, it tells you IT DON’T WORK.

Which is why it’s particularly shit at “vibe coding”

That being said, as a long-time script kiddie, I’d probably give it a go to make some BS project for my raspberry Pi work. Just nothing of any significance to the world.

It really is amazingly bad, and people don’t even realize it. I googled to get a picture of something this morning, and the AI-generated summary that came up, on a topic I happen to know a lot about, was flat out WRONG. Clicking on the “deep dive” button generated something that was a lot closer to correct. Pretty much anytime I get one of those summaries for something I know a lot about, stuff is obviously wrong. So I don’t trust anything I DON’T know in depth. You don’t know what you don’t know.

The thing that’s wild about those AI-generated summaries is that they often include links to reasonably accurate information that the AI has just flat-out ignored. Because, as always, AI doesn’t understand anything. That’s not what it does.

My favorite AI test was when they had one play Pokemon. It got stuck in a town because it kept trying to leave via the predicted exit and it never found the real one. One of the researchers suggested the AI was struggling because it couldn’t parse the simplified imagery of the game. Which is a pretty wild excuse to offer: “my pattern recognition machine doesn’t recognize what’s going on because these patterns are too simple.”

So uhh, if I shut down my engine because I forgot to add oil after changing it, it will restart after I pop the hood. Interesting choice.

I like the Maverick idea more, hell just give us a red LED light under the bonnet.

If the car is off, your engine won’t turn on… it’s only applicable when the vehicle is turned on.

With these modern cars it can be tough to know if you have turned the car off or not. Really, I am serious: the infotainment systems can stay on after you push the on/off button, so you are not sure if the car is on or off, so you hit the on/off button again… and maybe you turned the car off, maybe you turned it back on…

We have an ID.Buzz now and good lord most of the time when it’s parked I have no clue if it’s on or off. But at least there are no belts to rip my hand off (just watch out for the orange cables!)

One of the more unsettling aspects of modern devices for me is the lack of physical, intuitive on/off indication, which is worse for some devices where I’d really like provable indication of whether it’s powered on.

I can tell that my car is off because the key isn’t in the ignition cylinder, and I would be able to tell that even if it was an EV, and even if I wasn’t anywhere near the car, by knowing the whereabouts of the key.

I can tell that my headlights are off because my physical gauges do not light up if they’re not on. This is my problem with screens-as-gauges, the screen must always be lit to show any information at all, and that contributes to countless clueless people driving around with their lights off at night.

On a more tinfoil-hat level, I know that my phone’s camera and microphone are always on, and there’s pretty much nothing I can do about that. I can turn off any permissions I want, disable the voice assistant, disable active brightness control, and it’ll still be passively recording, and perhaps broadcasting. That also applies to car computers: you can’t turn off just the module that sells your information to LexisNexis because it’s just a line of software embedded and encrypted deep into the computer that also runs your engine, brake-by-wire and airbags.

I will never know, until a SWAT team kicks down my door for divulging the top-secret information that old roadsters are a more responsible buy than their hard-top counterparts because garage-kept, regularly-washed examples are the rule, not the exception, and fetch a much lower price.

I read some years ago that a handful of people had been killed by CO poisoning because they unintentionally left cars running in their attached garages. In those cases I believe push button start was blamed—you can grab your keys and go inside without shutting off the car. But presumably hybrid and stop-start introduce a similar risk.

CO detectors, people! I take one with me when I travel too.

My understanding is that unless you have an exhaust leak (before the catalytic converter) modern cars do not produce enough CO to kill you.

I guess the old “disconnect the battery before working on the vehicle” suggestion that we all ignore*, might be more relevant these days.

*(not for working on the electrics obviously, but if you’re just changing the oil…)

We didn’t teach a rock to think, but it turns out that to do things people have already explained a billion times, you don’t need to think; you just need to know how to look those things up and summarize them.

The generic “ai is bad” stuff is so 2023. I understand why people think it’s bad, and why people think it’s good; it’s time to move on to other blog templates.

My dude, if you ask ChatGPT-5 which states include the letter “R”, it will include Indiana, Illinois, Texas, Massachusetts, and/or Minnesota.

Hell, you can literally convince ChatGPT-5 that Oregon and Vermont do not have an “R” in their names.

On average, GPT models will still answer questions incorrectly 60% of the time.

AI is crap.

https://gizmodo.com/chatgpt-is-still-a-bullshit-machine-2000640488

https://futurism.com/study-ai-search-wrong
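For what it’s worth, the state-name question above is the kind of thing plain old dumb code gets right every time. Here’s a minimal sketch in Python that only checks the seven states named in that comment (it is not a full list of US states, and the helper name `contains_letter` is just made up for illustration):

```python
# Only the states mentioned in the comment above, not all 50.
NAMED_STATES = [
    "Indiana", "Illinois", "Texas", "Massachusetts",
    "Minnesota", "Oregon", "Vermont",
]


def contains_letter(name: str, letter: str) -> bool:
    """Case-insensitive check for a single letter in a name."""
    return letter.lower() in name.lower()


# Split the named states by whether their name contains an "R".
with_r = [s for s in NAMED_STATES if contains_letter(s, "r")]
without_r = [s for s in NAMED_STATES if not contains_letter(s, "r")]

print("Contain an R:", with_r)
print("No R:", without_r)
```

Of the seven, only Oregon and Vermont land in the first list, and you’ll get that same result on every run.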

So what you’re saying is, 60% of the time it works every time.

https://c.tenor.com/gMN1vJ8ILUwAAAAd/tenor.gif

The problem is that you have to do your homework to identify when it is correct vs incorrect. At that point, you might as well have done the task yourself!

^^^ This. Plus it’s pretty close to why I’ve never liked ‘autopilot’ in cars. When the computer freaks out and hands control back to you, you have to be awake and ready to react right away, so you might as well be driving yourself at that point.

If the car is always beeping and vibrating at me, how will I know when it’s a real emergency? Can’t I just drive my car?

Okay, I’m off to call the dealer and find out how to turn off the “slow down on a highway curve when using cruise control” feature. Really? You want to unload my tires going into a curve at 70mph just to make sure I’m paying attention? I turned off active lane assist, you stupid hunk of chips!!! (I yell at our new car a lot, lately.)

Exactly. A month or two back, my boss sent me a draft risk assessment for one of our pieces of equipment that he had ChatGPT come up with. Asked me to look it over.

It was so full of errors and irrelevant crap that I ended up having to rewrite the entire thing from scratch. Which meant teaching myself how to write risk assessments.

The problem is and will remain that LLMs hallucinate. Some do that a lot. Even with RAG and tool access, they can’t be trusted for either precision or recall. And they’re very good at putting together convincing text from prompts and context, and very suggestible, so it’s almost trivially easy to make them produce not just erroneous but dangerous content.

The problem with LLMs is the same problem with Tesla’s “full self-driving.” It’s very good and very impressive, and so people get complacent and just trust it to be right or do the right thing. But sometimes it fails, spectacularly, and when you build systems to make decisions or actually do things IRL based on input from an LLM (or FSD), very bad things happen. People die. That’s not an exaggeration.

“People die.”

And then after that happens, if you leave it to the AI, there will be a BBQ cookup… like in the movie Fried Green Tomatoes…

Manwich and Smokehouse Brisket having a conversation with BBQ in it …

I know what I want to eat this weekend.

Except that it doesn’t always look up things before summarizing them. Or verify the thing it looked up is reliable.

Actually, it’s more likely to grab incorrect than correct info.

The “vibe coders” have learned this the hardest, because programming is unforgiving. A single error collapses it all, so it is more prone to showing AI’s faults.

We take the AI subject seriously because it has invaded our industry, and not for the better. The problem with AI is that it doesn’t understand context or nuance. It’s basically running a web search and spitting out the first result that vaguely matches the question you asked it. And if it cannot find an answer at all, it just confidently makes something up and presents it as fact. It’s bad data in and worse data out.

It’s fascinating to watch in this industry. You cannot even trust Google’s AI to give you accurate horsepower figures, even though spec sheets for pretty much every new car are readily available. You just have to know where to find them and know the difference between trim levels. Since AI doesn’t “understand” it cannot do that.

Some prominent car websites are using AI for research, and it shows in the quality of their work. One website that I will not name recently published an article that claimed with confidence that shifting your manual car above 2,500 RPM is bad because it “reduces” engine performance. Huh?

On the other hand, if it was an article about 100-year-old tractors with peak torque at 550rpm…

I was looking up the correct hydraulic fluid to use in my pump, and while the AI response did include the correct types, it also included DEXRON, which is explicitly forbidden in the manual for the pump. It’s absolutely clueless.

Even if AI did do things correctly 100% of the time (and it very much doesn’t) it uses way too many natural resources just to run. AI data centers are using up a massive amount of potable water and expelling tons of pollution in the areas in which they’re situated, which tend to be, coincidentally, near poorer neighborhoods, thereby impacting the health of the people who live there. AI is essentially a less efficient, stupider version of a search engine. It’s only gotten so popular because it allows people to be lazier at the expense of actually doing almost anything right. And that’s not to mention all the intellectual property and copyright violations.

Google has gotten so much worse since they started leaning on AI to populate their search results. To the point where I think DuckDuckGo might actually be the better search engine these days, rather than a lesser one that you only use if you want Google to not know absolutely everything about you.

I actually switched my browser on my laptop and phone to DuckDuckGo earlier this year for both reasons: so that Google would stop knowing everything about me and because their search engine sucks now. Although I used Google for so long that I’m sure they already know everything, and DDG still has a few tweaks to work out before its search engine is as good as Google was, say, 10 years ago.