I think one of the things about getting cars to drive themselves that seems to be consistently overlooked by many of the major players in the space is just how much of the driving task has nothing to do with the technical side of things. Sure, the technical side is absolutely crucial, and the problem of how to get a car to interpret its surroundings via cameras, lidar, radar, whatever, and then use those inputs to construct a working model it can reasonably act on at a mile a minute or more is absolutely impressive. But that’s only part of the equation when it comes to driving, especially driving among humans. There are all sorts of cultural, environmental, and other complex and often fuzzy rules about driving among human activity to consider, and then there are the complicated concepts of intent and all the laws and the strange unwritten rules – driving is far more complex than the base mechanics of it.

All of this is to say that Tesla’s re-released Full Self Driving (FSD), and specifically its new mode known as Mad Max, is something interesting to consider, mostly because it is software that is designed to willfully break the law. I’m not sure what other products are out there in the world that are like this; sure, you can buy a knife at Dollar Tree and commit any number of crimes with it, but the knife doesn’t come from the factory with the ability to commit crimes on its own, except perhaps for loitering.

Oh, and as an aside, Tesla’s icon for the Mad Max mode feels a little misguided. Let me show you:

That little guy with the mustache and cowboy hat doesn’t really look like what we think Mad Max (lower left) looks like; it looks much more like an icon of Sam Elliott (lower right). Unless they mean some other Mad Max? Maybe one that won’t get them in trouble with Warner Bros?

Anyway, back to the Mad Max speed profile itself. Among Tesla fans, the new mode seems to make them quite excited:

Tesla FSD V14.1.2 Mad Max mode is INSANE.

FSD accelerates and weaves through traffic at an incredible pace, all while still being super smooth. It drives your car like a sports car. If you are running late, this is the mode for you.

Well done Tesla AI team, EPIC. pic.twitter.com/Z1lFB1jCrR

— Nic Cruz Patane (@niccruzpatane) October 16, 2025

Tesla’s Mad Max Speed Profile for their latest release of FSD V14.1.2 is actually programmed with the ability to break laws, specifically speeding laws. Here’s how Tesla’s release notes describe the new profile (emphasis mine):

FSD (Supervised) will now determine the appropriate speed based on a mix of driver profile, speed limit, and surrounding traffic.

– Introduced new Speed Profile SLOTH, which comes with lower speeds & more conservative lane selection than CHILL.

– Introduced new speed profile MAD MAX, which comes with higher speeds and more frequent lane changes than HURRY.

– Driver profile now has a stronger impact on behavior. The more assertive the profile, the higher the max speed.

The description doesn’t specifically say it’ll break any laws, but in practice, it definitely does. Here’s a video from well-known Tesla influencer-whatever Sawyer Merritt where you can clearly see the Tesla hitting speeds up to 82 mph:

Those 82 miles per hour occurred in a 55 mph zone, as the Tesla itself knows and is happy to display on its screen:

Why am I bringing this up? It’s not because I’m clutching any pearls about speeding (I don’t even have pearls, at least not real ones, I only have testicles to clutch as needed), because it’s no secret that we all do it, and there’s often a “folk law” speed limit on many roads where people just sort of come to an unspoken agreement about what an acceptable speed is. But that doesn’t mean it’s not breaking the law, because of course it is. And when you or I do such things, we have made the decision to do so, and we are flawed humans, prone to making all manner of bad decisions, or even just capable of being unaware, which, of course, ignorantia legis neminem excusat.

But this Tesla running FSD (supervised) is a different matter. FSD is, of course, a Level 2 system, which means it really isn’t fully autonomous, because it requires a person to be monitoring its actions nonstop. So, with that in mind, you could say the responsibility for speeding remains on the driver, who should be supervising the entire process and preventing the car from speeding, at least technically.

But maybe the driver is more of an accomplice, because the car knows what the speed limit is at any given moment; it’s even displayed onscreen, as you can see above. The software engineers at Tesla have this information and could have put in the equivalent of a command like (if they were writing the software in BASIC) IF CURRENTSPEED>SPEEDLIMIT THEN LET CURRENTSPEED=SPEEDLIMIT, but they made a deliberate decision not to do that. Essentially, they have programmed a machine that knows what the law is, and yet chooses to break it.
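For anyone who doesn’t read BASIC, here is roughly what that one-liner would look like as a minimal Python sketch. To be clear, this is purely illustrative: the function name and parameters are hypothetical and have nothing to do with Tesla’s actual code, which is not public. It only shows how small the "never exceed the posted limit" rule is once the car already knows both numbers.

```python
def clamp_to_limit(target_speed_mph: float, speed_limit_mph: float) -> float:
    """Return the speed the car wants to drive, capped at the posted limit.

    Hypothetical helper for illustration only; not Tesla's implementation.
    """
    # If the requested speed is above the limit, fall back to the limit;
    # otherwise leave the requested speed alone.
    return min(target_speed_mph, speed_limit_mph)


# Using the numbers from the video: the car wants 82 mph in a 55 mph zone.
capped = clamp_to_limit(82, 55)
print(capped)  # 55
```

With a cap like this in place, a more assertive profile could still change how quickly the car accelerates or how often it changes lanes, but not how far past the limit it goes.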

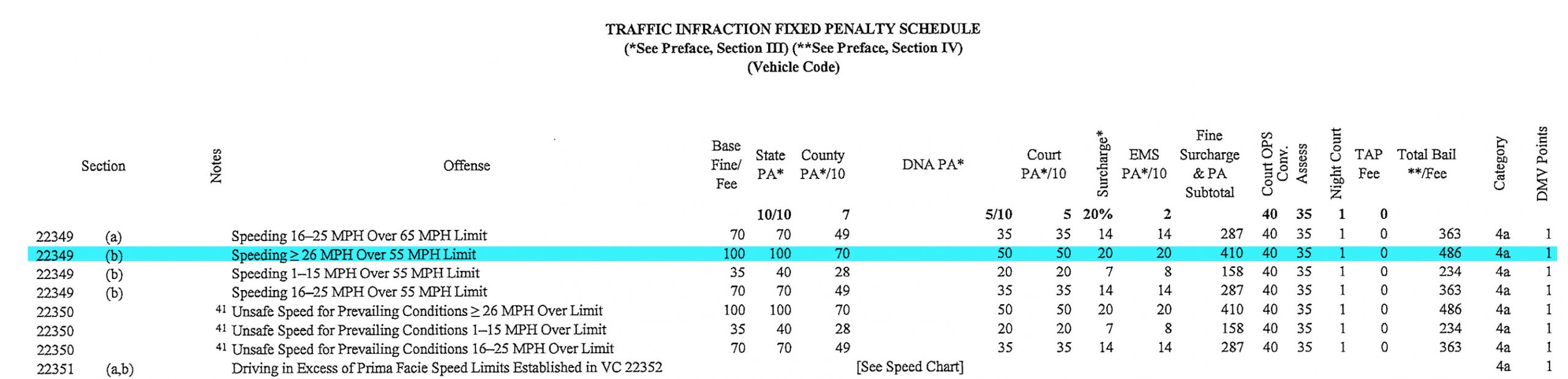

It’s not even really that minor an infraction, what we see in the example here; Merritt’s Tesla is driving at 27 mph over the stated speed limit, and if we look at, say, the Marin County, California Traffic Infraction Penalty Schedule, we can see how big a deal this speed-crime is:

I’m sure that’s impossibly tiny if you’re reading on a phone, so I’ll just tell you what it says: for going more than 26 mph over a 55 mph speed limit, it’s a total fine of $486 and a point on your license. That’s a big speeding ticket!

So, does this make sense? Even if we all speed on highways at times, that’s very different than a company making and selling a product that is able to or at least willing to assist in violating a known law. Again, this is hardly high treason, but it is a crime, and it’s a crime perpetrated by a machine, with the full blessing and support of the parent company. There are tricky ethical issues embedded in this that we really should be thinking about before we start mass deploying 5,000-pound machines with a healthy disregard for the law into the public.

Does the fact that this software willingly breaks traffic laws affect Tesla’s potential liability if anything should go wrong? I’m no lawyer, but it’s hard to see how it wouldn’t come up if a car running FSD in the Mad Max speed profile were to get into a wreck. Why are decision makers at Tesla willing to put themselves at risk this way? It seems like such a huge can of worms that you would think Tesla would rather not open, right?

If someone gets pulled over for speeding while using this system, can they reasonably claim it wasn’t their fault but was the fault of the car? It’s significantly different than a car on cruise control, because those systems require the driver to actively set the maximum speed; in this case, FSD decides what speed to drive. But the person did choose a speed profile that could speed, so are they responsible? Or does it come back around to the fact that speeding is possible at all in that profile?

This is complicated! Cars have always had the ability to break laws if driven in ways that didn’t respect the law, but this is beyond just an ability. This is intent. The fact that the software knows it can break a law almost makes it premeditated.

I’m not saying this is a terrible thing that must be stopped, or anything like that. I am saying that this software is doing something we’ve never really encountered before as a society, and it’s worth some real discussion to decide how we want to incorporate this new thing into our driving culture.

It’s up to us; just because these cars can break speed limits doesn’t mean we have to just accept that. We humans are still in charge here, at least for now.

By your logic any sort of adaptive cruise control shouldn’t let you speed. You the driver are selecting the mode, setting the illegal speed, and therefore responsible for whatever happens behind the wheel. No different than using FSD or similar autonomous modes while intoxicated.

Much like emissions systems, safety systems should always perform as well as they do under testing conditions, lest it become a major incident with a name ending in “gate” and consequences including irreversible brand damage. That means an “autonomous” driving system should drive at all times in such a way that it would score 100 out of 100 points on a driving license exam, no exceptions.

If automakers want to be able to program their cars to roll through a stop sign, they can lobby the government to legalize that behavior, or they can cope. Our society’s stance is that traffic laws exist for human safety, and regardless of whether I personally agree about their effectiveness, that is their legal purpose.

All automated systems are robots, and robotics has laws.

The first law of robotics is that a robot may not harm a human being or allow a human being to be harmed through inaction. Ignoring or subverting rules ostensibly created to safeguard human lives is in direct conflict with that law.

The second law of robotics is that a robot must obey orders given to it by a human when not in conflict with the first law. Traffic laws are orders given to drivers. A robot that drives is a driver, and as such, ignoring traffic laws is also in conflict with the second law.

The third law of robotics, that a robot must protect itself unless in conflict with the first two laws, is less relevant here, seeing as a car endangering itself is the same as endangering its occupants, but is still violated by a robot that deliberately circumvents safety procedures.

I’m ok with this. The FSD isn’t full self driving. It’s more like really good cruise control. If you want to set your cruise control to 82, then so be it.

Why did we get stuck in the worst timeline? This feature is why we can’t have nice things.

The first time someone running Mad Max creams a pedestrian into oblivion, or destroys another vehicle, or even hops a curb and self-selects, WB should sue Tesla into oblivion.

“Ludicrous Mode” and “Plaid” were borderline cringe and frankly at this point damaging to the Spaceballs IP, but have probably been in use too long for legal action to happen. The more that Tesla appropriates goodwill from popular media, the more consequences they should face when it blows up in their face.

This is not complicated; this is insane. Given FSD’s record, while just driving normally, of driving into things, attempting lane changes into oncoming traffic, and other very questionable maneuvers, do we really want this thing doing high-speed precision driving? Given FSD’s track record (pardon the pun) I’d vote no, but there are a whole host of Tesla FSD fans that would vote yes. These people would likely also let the system go hands-free. I am shooting down, right now, the argument that the system won’t let you, because I have seen the cheats on the internet. The driver “monitoring” would have to be right there to correct FSD when it tries to change lanes into a gap that is too small for the car to fit, at 25+ mph over the limit, and the fraction of a second lost grabbing for the wheel may be all it takes for disaster.

I don’t really understand the problem. Apart from the poor choice of naming.