I think one of the things about getting cars to drive themselves that seems to be consistently overlooked by many of the major players in the space is just how much of the driving task has nothing to do with the technical side of things. Sure, the technical side is absolutely crucial, and the problem of how to get a car to interpret its surroundings via cameras, lidar, radar, whatever, and then use those inputs to construct a working model it can act on at a mile a minute or more is absolutely impressive. But that’s only part of the equation when it comes to driving, especially driving among humans. There are all sorts of cultural, environmental, and other complex and often fuzzy rules about driving among human activity to consider, and then there are the complicated concepts of intent and all the laws and the strange unwritten rules – driving is far more complex than the base mechanics of it.

All of this is to say that Tesla’s re-released Full Self Driving (FSD) and its new mode known as Mad Max is something interesting to consider, mostly because it is software that is designed to willfully break the law. I’m not sure what other products are out there in the world that are like this; sure, you can buy a knife at Dollar Tree and commit any number of crimes with it, but the knife doesn’t come from the factory with the ability to commit crimes on its own, except perhaps for loitering.

Oh, and as an aside, Tesla’s icon for the Mad Max mode feels a little misguided. Let me show you:

That little guy with the mustache and cowboy hat doesn’t really look like what we think Mad Max (lower left) looks like; it looks much more like an icon of Sam Elliott (lower right). Unless they mean some other Mad Max? Maybe one that won’t get them in trouble with Warner Bros?

Anyway, back to the Mad Max speed profile itself. Among Tesla fans, the new mode seems to make them quite excited:

Tesla FSD V14.1.2 Mad Max mode is INSANE.

FSD accelerates and weaves through traffic at an incredible pace, all while still being super smooth. It drives your car like a sports car. If you are running late, this is the mode for you.

Well done Tesla AI team, EPIC. pic.twitter.com/Z1lFB1jCrR

— Nic Cruz Patane (@niccruzpatane) October 16, 2025

Tesla’s Mad Max Speed Profile for their latest release of FSD V14.1.2 is actually programmed with the ability to break laws, specifically speeding laws. Here’s how Tesla’s release notes describe the new profile (emphasis mine):

FSD (Supervised) will now determine the appropriate speed based on a mix of driver profile, speed limit, and surrounding traffic.

– Introduced new Speed Profile SLOTH, which comes with lower speeds & more conservative lane selection than CHILL.

– Introduced new speed profile MAD MAX, which comes with higher speeds and more frequent lane changes than HURRY.

– Driver profile now has a stronger impact on behavior. The more assertive the profile, the higher the max speed.

The description doesn’t specifically say it’ll break any laws, but in practice, it definitely does. Here’s a video from well-known Tesla influencer-whatever Sawyer Merritt where you can clearly see the Tesla hitting speeds up to 82 mph:

Those 82 miles per hour occurred in a 55 mph zone, as the Tesla itself knows and is happy to display on its screen:

Why am I bringing this up? It’s not because I’m clutching any pearls about speeding (I don’t even have pearls, at least not real ones, I only have testicles to clutch as needed), because it’s no secret that we all do it, and there’s often a “folk law” speed limit on many roads where people just sort of come to an unspoken agreement about what an acceptable speed is. But that doesn’t mean it’s not breaking the law, because of course it is. And when you or I do such things, we have made the decision to do so, and we are flawed humans, prone to making all manner of bad decisions, or even just capable of being unaware, which, of course, ignorantia legis neminem excusat.

But this Tesla running FSD (supervised) is a different matter. FSD is, of course, a Level 2 system, which means it really isn’t fully autonomous, because it requires a person to be monitoring its actions nonstop. So, with that in mind, you could say the responsibility for speeding remains on the driver, who should be supervising the entire process and preventing the car from speeding, at least technically.

But maybe the driver is more of an accomplice, because the car knows what the speed limit is at any given moment; it’s even displayed onscreen, as you can see above. The software engineers at Tesla have this information and could have put in the equivalent of a command like (if they were writing the software in BASIC) IF CURRENTSPEED>SPEEDLIMIT THEN LET CURRENTSPEED=SPEEDLIMIT, but they made a deliberate decision not to do that. Essentially, they have programmed a machine that knows what the law is, and yet chooses to break it.
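For anyone who doesn't speak BASIC, here is the same idea as a minimal Python sketch. To be clear, this is not Tesla's code: the function name `capped_target_speed` and its parameters are hypothetical, made up purely to show how small a "never exceed the posted limit" rule could be, assuming the software already has a requested speed and a known speed limit to compare.

```python
def capped_target_speed(requested_mph: float, speed_limit_mph: float) -> float:
    """Return the speed the car should aim for, never above the posted limit.

    Both arguments are hypothetical inputs in miles per hour; a real driving
    stack would have many more factors than these two numbers.
    """
    # Same logic as the BASIC one-liner: if the requested speed is over the
    # limit, use the limit; otherwise keep the requested speed.
    return min(requested_mph, speed_limit_mph)


if __name__ == "__main__":
    # The speeds from the video: 82 mph requested in a 55 mph zone.
    print(capped_target_speed(82, 55))
    # A request under the limit passes through unchanged.
    print(capped_target_speed(48, 55))
```

With the numbers from the video, a cap like this would hold the car at 55 instead of 82. The point is not that this exact line belongs in the software, only that the comparison itself is trivial once the car knows the limit.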

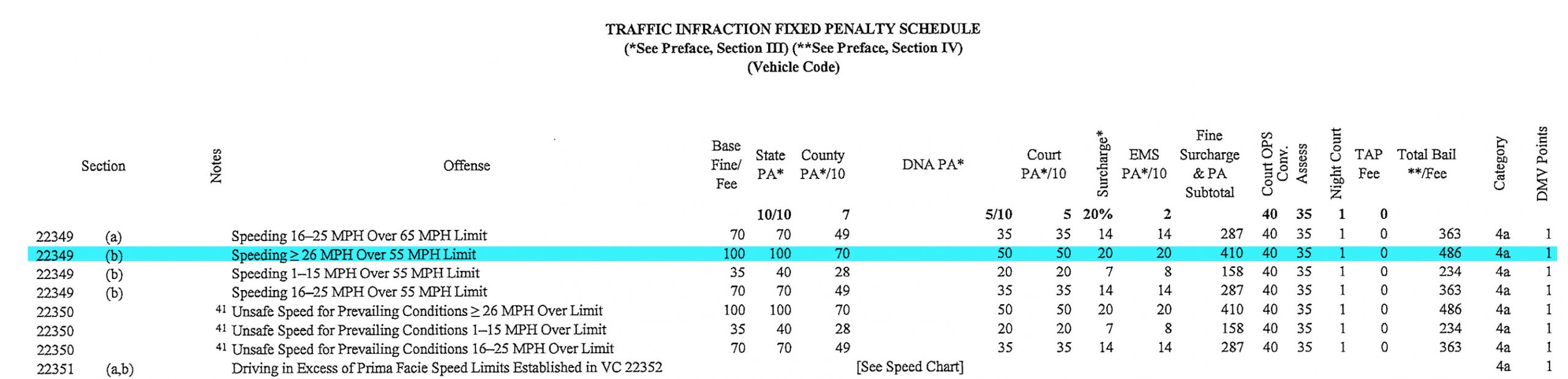

It’s not even really that minor an infraction, what we see in the example here; Merritt’s Tesla is driving at 27 mph over the stated speed limit, and if we look at, say, the Marin County, California Traffic Infraction Penalty Schedule, we can see how big a deal this speed-crime is:

I’m sure that’s impossibly tiny if you’re reading on a phone, so I’ll just tell you what it says: for going more than 26 mph over a 55 mph speed limit, it’s a total fine of $486 and a point on your license. That’s a big speeding ticket!

So, does this make sense? Even if we all speed on highways at times, that’s very different than a company making and selling a product that is able to or at least willing to assist in violating a known law. Again, this is hardly high treason, but it is a crime, and it’s a crime perpetrated by a machine, with the full blessing and support of the parent company. There are tricky ethical issues embedded in this that we really should be thinking about before we start mass deploying 5,000-pound machines with a healthy disregard for the law into the public.

Does the fact that this software willingly breaks traffic laws affect Tesla’s potential liability if anything should go wrong? I’m no lawyer, but it’s hard to see how it wouldn’t come up if a car running FSD in the Mad Max speed profile were to get into a wreck. Why are decision makers at Tesla willing to put themselves at risk this way? It seems like such a huge can of worms that you would think Tesla would rather not open, right?

If someone gets pulled over for speeding while using this system, can they reasonably claim it wasn’t their fault but was the fault of the car? It’s significantly different than a car on cruise control, because those systems require the driver to actively set the maximum speed; in this case, FSD decides what speed to drive. But the person did choose a speed profile that could speed, so are they responsible? Or does it come back around to the fact that speeding is possible at all in that profile?

This is complicated! Cars have always had the ability to break laws if driven in ways that didn’t respect the law, but this is beyond just an ability. This is intent. The fact that the software knows it can break a law almost makes it premeditated.

I’m not saying this is a terrible thing that must be stopped, or anything like that. I am saying that this software is doing something we’ve never really encountered before as a society, and it’s worth some real discussion to decide how we want to incorporate this new thing into our driving culture.

It’s up to us; just because these cars can break speed limits doesn’t mean we have to just accept that. We humans are still in charge here, at least for now.

It is my personal opinion that all “automated” driving systems should obey traffic laws. Fully stop at all stop signs, obey speed limits, etc. Humans can make the conscious decision on when it is appropriate to disobey them (or think they can), but the machine shouldn’t be able to be programmed to disobey laws.

Either that or the operator accepts an automated traffic fine for setting their AI to disobey the law. I might be on board with one of those Scandinavian fines being automatically applied to an AI being programmed to break the law.

Does it have safeguards for school zones or around school buses? Not sure I want to know what little Timmy would look like after getting blasted by a wrapped Tesla X going 60 around a bus.

You should look up the Automated Emergency Braking and Collision Avoidance tests and come back and tell us which car does best.

A worthy successor to the Nissan Altima is born!

I don’t want to be that guy (but I’m totally going to be him)

But I think you have the variables the wrong way around in the second half of your code?

In Visual Basic for Applications in Excel (The IDE of Kings) you’d do something like the below:

I stopped to think about it too, but I believe he’s saying it should set CurrentSpeed to be equal to the value of SpeedLimit. As in, check if you’re currently going faster than the speed limit and if so, change your speed to match the speed limit.

Your version would change SpeedLimit to match CurrentSpeed.

I think. I’m actually a mechanical engineer, so it’s very possible I’m wrong.

Yeah, I mis-read the article. I thought he was trying to posit how Tesla might have just made it do whatever it liked. My bad!

I’m a software dev… yes you are correct. However, if this was Visual Basic, some knucklehead would also add “On Error Resume Next” (Google that if you’re not familiar with it). I once had to debug an app that had that code littered throughout the app.

If you want to switch off the autopilot and floor it that’s one thing, and sometimes driving the speed limit makes you a roadblock (I85 around Charlotte comes to mind), but I think cars should drive themselves like they’re trying to pass their license test. Go fast or let the car drive, one or the other.

Oh, so we’re outsourcing our anti-social behavior to the computer! What are you doing that you need the car to violate multiple traffic laws for you? Like, are dudes just liking Facebook memes in traffic thinking, “You know, I wish everyone in my greater metro area would consider me a nuisance to society. But I can’t take my eyes off the phone for more than thirty seconds!”?

Let’s take a group of frequently distracted, unskilled operators of heavy, 400+ horsepower vehicles and increase their relative speed. What could go wrong?

Eh, just carry a few extra bottles of that Mountain Dew crap along with you.

Just in case…

I want a list of all the “folk law” speed limits, where what people actually drive is so far removed from what the legal speed limit is. My best example is I-80 in Indiana, which I feel like is 55 for some stretches by the Illinois border, but we all definitely go 80.

Too many to list. However, I have found one highway where the speed limit is reasonably obeyed: I-15 in southern Utah is 80 mph, and a majority of the traffic is around that speed.

I happen to know that in at least one state all highways are designed for 103mph traffic but the max limit is 75mph, go figure.

Highway 100 in Minnesota has a speed limit of 60 mph but everyone’s going 70/75 minimum. It’s only two lanes for most of it as well so the left lane is usually going at least 80.

Oh yes, I’m in Southern MN so visit that stretch of road often. Fully agree

I-80 here in Northern California is also 80 mph, assuming traffic allows it. (It’s posted at 65).

When I lived in Sac the saying was “80 on 80”. If you weren’t doing it, you had best get out the way!

State Highway 67 in Decatur, Alabama (where I live) has about a three-mile stretch where the speed limit is 50 but everyone – including police – goes at least 65 and doesn’t bat an eye. It’s like the entire city has decided 50 is just too slow and doesn’t care what the state says.

I thought this was another Torch jokey article. HOLY SHIT, this is real. WTF is going on.

Maybe Elon & Don have kissed and made up enough that Tesla feel even more emboldened to do whatever the hell they want without fear of consequences.

The government seems to be more interested in persecuting law-abiding citizens than prosecuting law-breaking corporations.

I don’t think they’re officially back together, they’re just trying to be friendly for the sake of their respective kids, Eric took the breakup especially hard. They might try to do a weekend together in Florida this winter and see if anything develops

Corporate America: “yay, two Christmases!”

We should come up with a celebrity couple name for shorthand. Elonald?

The Mumps

I think RFK already took that one.

Can I sue them for misappropriation of my name?

Depends. Are you…. mad?

Very

It’s not the first time they released an FSD update that was designed to break the law. Remember when they released a mode that allowed the Tesla to do the California stop (aka the rolling stop)?

That said, as someone who has no money for a car at the moment and relies on walking and public transit to get around, I am really uncomfortable with this. I’m already not happy about being made into a test dummy without my consent, and now I’ve got to worry about a driver using FSD and having their Tesla driving towards me at 20+ mph over the speed limit? If that car hits me, I don’t have a metal cage protecting me and absorbing the energy from the impact; I’m going to lose. How is this allowed? (I say, while knowing that laws are only as useful as the government’s willingness to enforce them.)

“– Driver profile now has a stronger impact on behavior. The more assertive the profile, the higher the max speed.”

I find it alarming that if you drive like an asshole, this mode will drive like more of an asshole. I was really hoping this would be a joke at some point, but it isn’t. Of course it isn’t.

Is $486 really an oppressive fine for someone who can afford a Tesla? More like pocket change, I’d say.

Guess it depends on what year. New? Definitely. An 8-year-old one with well over 150k miles? Could have just been cheap transportation for a commuter.

I am sitting here like the Michael Jackson popcorn meme, just waiting for this to go awry and them getting sued.

Musk did insist on some safeguards. It doesn’t really take off until it hits 14 and it won’t go higher than 88.

Me at first: “hehe 88mph, funny back to the future meme”

Me, a moment later: “and now I’m sad”

Needs a “swim mode”.

They should have called it “Altima Mode”.

Only available if there’s a temporary spare on the drive axle and half the bumper cover clips broken/missing.

Also needs at least one window made out of a garbage bag and duct tape

Pink fuzzy steering wheel cover and script “Blessed” sticker on trunk recommended, but not required

That unlocks at 185k miles and at least three hits on the Carfax.

If you have a full self-driving mode it has to be required to follow the speed limit. Full stop. Nothing else is acceptable. You can’t have a machine that willfully breaks the law, it’s a litigation nightmare.

I know this isn’t FSD despite what Tesla wants people to believe, but that’s what they’re working towards (ostensibly) so they should be working with that in mind.

I am curious to see if it will do this in a school zone as well.

Marques Brownlee at some point in the future: “No, officer, I wasn’t going 95 in a 25mph school zone, the Tesla did it.”

The Mad Max they’re referring to is Jordan Belfort’s dad, as much like everything in The Wolf of Wall Street, Tesla is primarily a financial fraud scheme.

This means at some point a group of Tesla employees sat around a table and decided the *correct* speed in a 55 was 82 (rather than 74 or 98 etc). I wonder how they picked that number.

I assume it’s a significant number for either a drugs thing or a sex thing.

Nice.

Terrible idea, but it doesn’t do it unless the operator commands it. I can set cruise at 20 over the limit in my Corolla and it will do it happily while showing a little red image of whatever the speed limit actually is.

It’s an interesting discussion. You could make the same argument with an existing cruise control – why can I set it to > than existing speed limits?

I mean kind of. The driver choosing to do it is a lot different than the shitty ai that is supposed to drive the car.

Honestly, speed limits in general are interesting. It’s basically a law that

1) virtually nobody obeys

2) there’s virtually no enforcement (within reason)

3) If you actually follow the law the rest of society will be pissed at you

I struggle to think of another “law” in society that’s treated this way.

It’s worse. In regards to #2, it’s not enforced unless the officer is profiling and needs a (legal) pretext for making the stop.

I wouldn’t say any of that applies to the UK any more.

In a few hours I can drive to legal roads with no upper speed limits. Seems fine to allow my car to use its cruise control there.

Well, for one, none of my cars know what the speed limits are on the roads I’m driving on…

Because cruise control is just a PID, it’s a singular function of a manually-operated machine. Its only job is to do what the driver tells it to. Meanwhile, ADAS acts without driver input beyond “on”, and breaks the law of its own volition.

If I have a regular blender that happens to be powerful enough to blend plastic, and I fill it with lithium-polymer batteries before turning it on, the fact that the blender keeps going until it melts in the ensuing house fire is my fault, and only my fault, because the machine has only done one thing, exactly what I told it to, with no decision-making on the machine’s part.

However, if the ghost of SEARS sells me an autonomous air fryer that finds its own ingredients, and it comes with an “Inglorious Basterds” button that causes it to seek out and ignite nitrocellulose film

Likewise, if I have a car with dumb cruise control, the manufacturer has no way of knowing whether speed limits will be raised in the future, or what the user might need it to do. This is not a task that is expected of the developer of the device, because that is up to the driver.

An ADAS system, however, is itself a driver, and the developers of the system are (if not legally, at least ethically) liable for its actions. The person or company that programs a self-driving system to drive 15mph over the limit, and rolls out that software to 2.8 million cars, is effectively driving those 2.8 million cars.

If it were any company other than Tesla, I’d be surprised by this bravado/foolishness, given the various pending federal investigations and lawsuits about FSD accidents over the years. Presumably, Elon felt having “Mad Max” mode was worth whatever aggravation/legal exposure that it might cause.

I can’t help but wonder what the equivalent attitude might look like when Tesla becomes a company that mostly sells humanoid robots like Elon predicts. Will those robots sometimes be able to violate Asimov’s Three Laws if the user/owner clicks a checkbox in their settings to do so?

Only if Elon allows it on that robot because it’s near someone who was mean to him on Twitter (i.e. me).

This assumes they’ll even be programmed with the three laws in the first place.

Much cheaper to not bother with the three laws and just have the owner agree it’s all their fault in the small print somewhere.

Silly updates are cheaper than actually improving the cars. Maybe he is going for the VW Beetle record of staying ugly for decades.

FSD already breaks the Three Laws, so I suspect Optimus will be a real treat for fans of Terminator.

An excellent point. Of course it does.