Artificial Intelligence (AI) technology is a powerful tool, but like many powerful tools, it has the potential to allow humans to let our natural abilities atrophy. It’s the same way that the invention of the jackhammer pretty much caused humans to lose the ability to pound through feet of concrete and asphalt with our bare fists. We’re already seeing effects of this with the widespread use of ChatGPT seemingly causing cognitive decline and atrophying writing skills, and now I’m starting to think advanced driver aids, especially more comprehensive ones like Level 2 supervised semi-automated driving systems, are doing the same thing: making people worse drivers.

I haven’t done studies to prove this in any comprehensive way, so at this point I’m still just speculating, like a speculum. I’m not entirely certain a full study is even needed at this point, though, because there are already some people just flat-out admitting to it online, for everyone to see, free of shame and, perhaps, any degree of self-reflection.

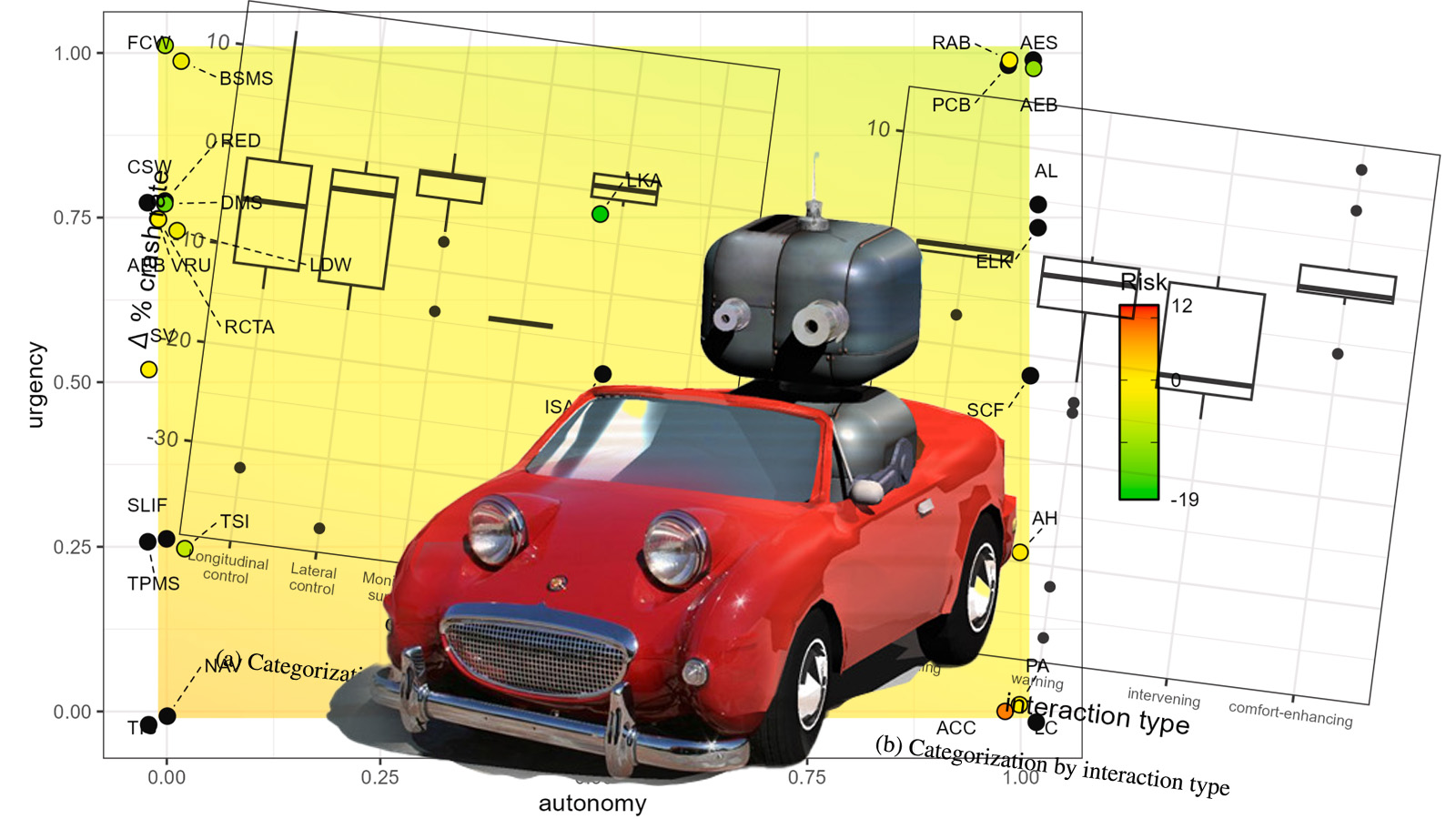

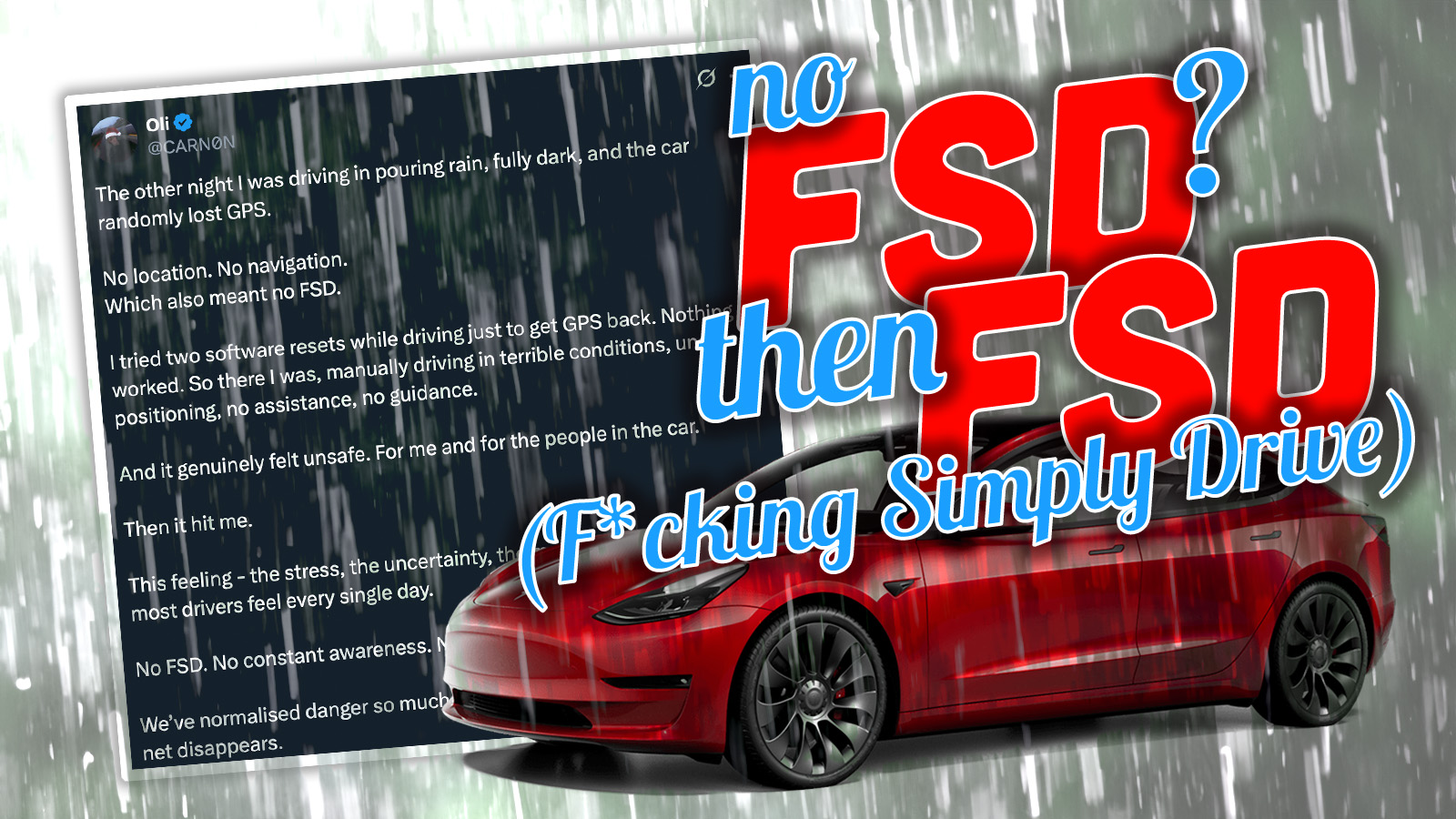

Specifically, I’m referring to this tweet that has garnered over two million views so far:

The other night I was driving in pouring rain, fully dark, and the car randomly lost GPS.

No location. No navigation.

Which also meant no FSD. I tried two software resets while driving just to get GPS back. Nothing worked. So there I was, manually driving in terrible… pic.twitter.com/hKlU6AlCEZ

— Oli (@CARN0N) December 13, 2025

Oh my. If, for some reason, you’re not able to read the tweet, here’s the full text of it:

“The other night I was driving in pouring rain, fully dark, and the car randomly lost GPS. No location. No navigation. Which also meant no FSD. I tried two software resets while driving just to get GPS back. Nothing worked. So there I was, manually driving in terrible conditions, unsure of positioning, no assistance, no guidance. And it genuinely felt unsafe. For me and for the people in the car. Then it hit me. This feeling – the stress, the uncertainty, the margin for error – this is how most drivers feel every single day. No FSD. No constant awareness. No backup. We’ve normalised danger so much that we only notice it when the safety net disappears.”

Wow. Drunk Batman himself couldn’t have beaten an admission like this out of me. There’s so much here, I’m not even really sure where to start. First, it’s night, and it’s “fully dark?” That’s kind of how night works, champ. And, sure, pouring rain is hardly ideal, but it’s very much part of life here on Earth. It’s perfectly normal to feel some stress when driving in the dark, in bad weather, but it’s not “how most drivers feel every single day.” Most drivers are used to driving, and they deal with poor conditions with awareness and caution, but, ideally, not the sort of panic suggested in this tweet.

Also, my quote didn’t replicate the weird spacing and short, staccato paragraphs that made this whole thing read like one of those weird LinkedIn posts where some fake thing someone’s kid said became a revelation of B2B best practices, or some shit.

It seems that the reason this guy felt the way he did when the driver aids were removed is that he’s, frankly, not used to actually driving. In fact, if you look at his profile on eX-Twitter, he notes that he’s a Tesla supervisor, which is pretty significantly different than calling yourself a Tesla driver:

This is an objectively terrible and deeply misguided way to view your relationship with your car for many reasons, not the least of which is the fact that even if you do consider yourself a “supervisor” – a deeply flawed premise to begin with – the very definition of Level 2 semi-autonomy is that the person “supervising” has to be ready to take over with zero warning, which means you need to be able to drive your damn car, no matter the situation it happens to be in.

If anything, you would think the takeaway here would have been, shit, I need to be a more competent driver and less of a candy-ass as opposed to coming away thinking, as stated in the tweet,

“We’ve normalised danger so much that we only notice it when the safety net disappears.”

This is so deeply and eye-rollingly misguided I almost don’t know where to start, except I absolutely do know where to start: the idea that the “safety net” is Tesla’s FSD software. Because that is exactly the opposite of how Level 2 systems are designed to work! You, the human, are the safety net! If you’ve already made the arguably lazy and questionable decision to farm out the majority of the driving task to a system that lacks redundant sensor backups and is still barely out of Beta status, then you better damn well be ready to take over when the system fails, because that’s how it’s designed to work.

To be fair, our Tesla Supervisor here did take over when his FSD went down due to loss of a GPS signal, but, based on what he said, he felt “unsafe” for himself and the passengers in the car. The lack of FSD isn’t the problem here; the problem is that the human driver didn’t feel safe operating their own motor vehicle.

Not only was he uncomfortable driving in the inclement weather and lack of light (again, that’s just nighttime, a recurring phenomenon), but the reason he had to debase himself so was because of a technical failure of FSD, which, it should be noted, can happen at any time, without warning. Hence the need to be able to drive a damn car, comfortably.

What does he mean when he says, referring to human driving, “no constant awareness?” Almost every driver I know is constantly aware that they are driving. That’s part of driving. Do people get distracted, look at phones, get lost in reveries, or whatever? Sure they do. That’s not ideal, but it doesn’t mean people aren’t aware.

Unsurprisingly, the poster of this admission has been getting a good bit of blowback in comments from people a little less likely to soil themselves when they have to drive in the rain. So, he provided a follow-up tweet:

To everyone that says I shouldn’t have a license:

This is a true story – it was pouring rain a couple of weeks ago on a Saturday night, with insane wind making it a recipe for disaster. I had just washed the car and was on my way to pick someone up.

About 30 seconds after… https://t.co/POIA0Ue58n

— Oli (@CARN0N) December 15, 2025

I’m not really sure what this follow-up actually clarified, but he did describe the experience in a bit more detail:

“I knew the rough direction but not exactly. I never use my phone while driving, so I rely solely on the car nav. Unfortunately, it wasn’t working, and I had to pull over to double-check where I was going.”

That’s just…driving. This is how all driving was up until about 15 years ago or so. I have an abysmal sense of direction, so I feel like I spent most of my pre-GPS driving life lost at least a quarter of the time I was driving anywhere. But you figure it out. You take some wrong turns, you end up in places you didn’t originally plan to be in, you look at maps or signs or ask someone, and you eventually get there. It wasn’t perfect, but it was what you had, and when we could finally, say, print out MapQuest directions and clip them to the dash, oh man, that was a game changer.

I took plenty of long road trips in marginal cars with no phone and just signs and vague notions to guide me where I was going. If I had to do it today, sure, there would be some significant adapting to exhume my pre-GPS navigational skills – well, skills is too generous a word, so maybe we can just say ability – but I think it could be done. And every driver really should be able to do the same thing.

FSD (Supervised) is a tool, a crutch, and if you find yourself in a position where its absence is causing you fear instead of just a bit of annoyance, you’re no longer really qualified to drive a car. Teslas (and other mass-market cars with similar L2 driver assist systems) don’t have redundant sensors, most don’t have the means to clean camera lenses (or radar/lidar windows and domes), and none of them are rated for actually unsupervised driving. Which means that you, the person in the driver’s seat, need to actually live up to the name of that seat: you have to know how to drive a damn car.

This tweet should be taken as a warning, because while it’s fun to feel all smug because you can drive in the rain and ridicule this hapless fellow, I guarantee you he’s not alone. There are other people whose driving skills are atrophying because of reliance on systems like Tesla’s FSD, and this is a very bad path to go down. Our Tesla Supervisor here may actually have been unsafe when he had to take full control of the car and didn’t feel comfortable. And that’s not a technical problem, it’s a perception problem, and it’s not even the original poster’s fault entirely – there is a lot of encouragement from Tesla and the surrounding community to consider FSD to be far more capable than it actually is.

Driving is dangerous, and it’s good to feel that, sometimes! You should always be aware that when you’re driving, you’re in a metal-and-plastic, ton-and-a-half box hurtling down haphazardly maintained roads at a mile per minute. If that’s not a little scary to you, then you’re either a liar, a corpse, or one of those kids who started karting at four years old.

We all need to accept the reality of what driving is, and the inherent, wonderful madness behind it. I personally know myself well enough to realize how easily I can be lured into false senses of security by modern cars and start driving like a moron; to combat this, my preferred daily drivers are ridiculous, primitive machines incapable of hiding the fact that they’re just metal boxes with lots of sharp, poke-y bits that are whizzing along far too quickly. Which, in the case of my cars, can mean speeds of, oh, 45 mph.

The point is, everyone on the road should be able to capably drive, in pretty much any conditions, without the aid of some AI. Even when we have more advanced automated driving systems, this should still be the case, at least for vehicles capable of being driven by a human. But for right now, systems like FSD are not the safety net: the safety net is always us. We’re always responsible when we’re in the driver’s seat, and if we forget that, we could end up in far worse situations than just embarrassing ourselves online.

But that can happen too, of course.

What a wuss. I bet that made him late for his perm appointment, too. You couldn’t pay me to own a car so beloved by such proudly helpless, willing slaves even without the clown in charge of the company. Just reading this coward’s whining made me recoil in disgust. Younger me would have never thought I’d say it (younger me didn’t quite realize that the Pendulum Effect worked with every aspect of humanity), but we need to bring back shame. How do people like this survive day-to-day? Who is feeding them and why? Some people have it far too easy and it’s entirely to their detriment.

Well, by publicizing them, Jason is feeding them more attention, for one thing.

There have always been, and always will be people like this. The internet age has just given them an easy mouthpiece. The important thing is to not engage them even more. Just ignore them and move on, the exact opposite of this entire article.

How does he cross the street by himself? Does his Mommy still hold his hand?

I’ve taken cross-country trips relying on nothing but road signs.

Anyone remember being given directions to someone’s house similar to “Make a right at the Seven-Eleven, and at the fourth stop sign make a left, then a right at the yellow house. Go two blocks and we’re in the green house with the white mailbox on the left.”

When I was stationed in Japan – I would drive all over the place, and I could not read 80% of the signs, and I rarely had a map – but I usually wound up where I wanted to be, or at least somewhere interesting!

(Tho my personal rule was to never drive down a one-way street – because if I did I would never be able to turn around and go back to where I came from, as one could never count on making 4 lefts and winding up where you were before.)

Jason, I know you’ve picked autonomous vehicles as the hill you’d like to die on, but you can’t let random Tesla stans get to you like this.

Now all you’ve done is drive more attention and traffic to this guy.

Yeah. I know Autopian strives for Educational and Entertaining (I think that’s it right?). But which one is this?

Just seems like pointing out someone stupid.

There were possible interesting directions to go with this. Comments have pointed out that FSD has only been in Australia for a few months. Is it legal there? What are the driving license requirements in Australia? In my US state, a permit requires 50 hours of driving at night, but I thought Australia, like Europe, was a bit more rigorous.

As is, this article is just falling victim to the algorithm.

There is some Education buried in the outrage. Jason rightly points this out, which isn’t necessarily obvious to everybody:

> I absolutely do know where to start: the idea that the “safety net” is Tesla’s FSD software. Because that is exactly the opposite of how Level 2 systems are designed to work! You, the human, are the safety net!

Jason does seem to be channeling Lewis Black a lot lately.

We got 5″ of snow here in western PA Saturday night, and on Sunday afternoon I drove my whole family to a museum in another town, which turned out to be closed due to weather (when we arrived, it was sunny & accumulation had ended 8-12 hours prior, it really wasn’t a big deal).

Anyway, my 17-yo said we may as well go find other things to do, so we ended up on a long drive through the beautiful countryside. The back roads weren’t perfectly clear, but it was sort of normal post-snow conditions. I never felt unsafe.

I did, however, get behind 2 different drivers who were evidently terrified: going 5 mph or less, clearly unable to assess conditions with any sense of reality. I don’t know if they were hobbled by time in self-drivers or what, but it was kind of shocking.

Later, closer to town, I saw a more typical array of drivers who were being IMO overcautious, but within normal range. But those two literally shouldn’t have licenses. Sheesh.

I remember driving my 2CV from Yorkshire to Dorset using a list of cities to aim for and a compass. It took all day, but then it only had 29bhp and was heading in to the wind.

It did get fully dark at one point, but there was this thing in the car to twist that made the dark go away from the front of the car.

I see you had sprung for the fully optioned-out model.

I’d like to subscribe to your newsletter. Wait… I already do.

Totally agreed and my 78 year old father in law is in the same group of people who really shouldn’t be driving if they can’t do so without electronic crutches.

This atrophying of skills is precisely why I refuse to use navigation systems. I will decide when and where to turn, dagnabit!

Eh, I can get myself around my city without it, but Google Maps routes me around traffic I wouldn’t have otherwise known about (accidents, congestion, etc). Most of the time I’m not actually paying attention to the turn-by-turn, I can just look ahead and see “oh there’s an accident on my normal offramp, better take the other one”, etc.

Ehhh. Navigation systems are reliable and ubiquitous enough at this point that navigating by the stars and your uncle’s slurred “shortcuts” isn’t the best use of brain cells.

“Somebody once told me

The world was gonna roll me,

I ain’t the sharpest tool in the shed”

Good lord, *this* BS is why I hate the fact that this (so-called) “self-Driving” crap is allowed on public streets. I did not sign up to be a crash test dummy for Tesla, Waymo, et al.

It does remind me of a joke though. Why is a nice, sunny day with perfect weather the most dangerous time to drive? Because numbnuts like our friend here are on the roads.

I’m much more ok, at least conceptually, with Waymo than with these FSD types of things. With a completely self-driven vehicle, there’s none of this I’m-driving-I’m-not-driving-I’m-sort-of-driving crap going on. An FSD system relies on the driver to pay attention and drive well if necessary. Clearly, that’s not what some people want to do and/or don’t feel competent doing. I’d rather have them in a Waymo than doing a halfassed job of driving a partially automated Tesla.

And that joke is entirely on point!

After a Waymo ran someone down in Tempe a couple of years back I haven’t thought much of them, either. The accident happened in a spot I walk by quite frequently every year while on vacation so it stays fresh in my mind.

Lessons from American Eagle 4184? Basically plane flew on autopilot until it was unflyable, then gave up.

Air France 447 is in the same category. Sensors went bad, autopilot gave up and then the pilots misflew the completely flyable airplane into the ground.

Remember the good old days when you used to plan out a road trip. You would unfold the map on the kitchen table, carefully look at the available routes, add up the little red numbers between cities, and make a detailed notecard about where you needed to turn and the distance to the next turn. You would then properly fold the map and put it back in the glove box…. If you hadn’t committed it to memory before leaving, the notecard was tucked up in the elastic band of the sun visor for easy reference. My wife says GPS has made me soft, but I can certainly still navigate by map and compass. Should be a required part of driver’s training, these kids (shakes fist at cloud…).

I’m pretty sure 7 year old me would be far better at getting around using an old map than these people are with all the software in the world…

I never went to that level, but even in the days of Mapquest printouts, I’d note what the street/exit past the one I wanted was so that I knew when I went too far and had to turn around.

Meh. By and large the good old days are just old and weren’t that good.

Yes. I haven’t driven a semi-automated car beyond radar cruise control, but my experience tells me this: the times when I have felt most uneasy driving (full snow whiteout on a white-covered highway in the daytime, snow + fog on a white-covered twisting mountain road at night) are the times when I absolutely wouldn’t trust an automated system to turn the wheel for me.

I remember my 2022 Acura TLX losing radar cruise control (and therefore all cruise control) due to just the right level of misty rain on I-45 between Dallas and Houston. I had no problem taking over, but it made me think how some of this tech really is worse than what we had before it. Old school cruise wouldn’t have cared at all.

Good point. Thankfully mine does both.

To paraphrase: There’s too many self-indulgent wieners in this world, with too much bloody money!

So if this guy spent a week just hanging in his house, would he walk out the door and suddenly realize that it’s REALLY DANGEROUS to leave your house? You could have a tree branch fall and hit you. A car could jump the curb and run you over. You have to walk along sidewalks all by yourself and not fall off. And the list goes on.

I guess this attitude had to start at some point, but it seems awfully early. Those systems have only been available for a few years and now people feel unable to drive without them? Jeez….

EDIT: Just saw the comment below regarding their availability in Australia. Obviously, that makes this even more absurd.

Heck, even your house is dangerous. My stairs try to kill me on a semi regular basis.

It’s worth noting that this guy is from Australia. FSD only launched here in September this year. So this guy has managed to completely forget how to drive in only 3 months. Impressive.

To be fair, everything in Australia is trying to kill you. At least, that’s what I’ve heard. Crocodiles, giant spiders, dingos stealing your babies… I’m amazed you folks ever leave the house.

“I have lost my fiancé. The poor baby.” “Maybe the dingo ate your baby.” “What?” “The DINGO ate your baby.”

Fully Self-Sufficient Dingo

FSD is just another thing to add to that list lol

Also worth noting that even though FSD is available here, it’s far from legal…

0 to 60 in a few seconds? what could possibly go wrong with this driver?

How good was this guy’s driving before he had FSD?

Wait, doesn’t FSD rely on camera sight to see where to drive?

Yep! And it’s infamously pretty bad with rain too!

As the enshitification of cars continues, I see the enshitification of drivers is not far behind.

They’ve been fully enshitified since GPS, or fuel injection, or cruise control, or electric starters. Dealer’s choice.

Naw. Everyone knows it was taking lead out of gas that spelled the death of the American Motorist.

Nah. It was clearly wheels going from stone to wood that softened the driving populace.

Mmm… a tank of tetraethyl and a can of 104 Octane Boost made my ’68 Coupe deVille run just right. Back when it was being phased out there was one local-ish station left that mixed up their own blend, had a single pump to dispense it, and I used to drive quite a ways out of my way to fill up there. They charged almost twice as much, but it was still cheaper than AvGas which was an even bigger pain in the ass to fill up on.

I thought that this had to be rage bait until I saw the dude’s profile. Nope, just another insufferable stan doing insufferable stan things.

chuckle chuckle -snort- chorckle

this is so funny, why am I not surprised, just amazed but also not

I’m still laughing

Acceptable kvetch: “Aw, shit. It’s dark and pouring and harsher than usual windy, and the GPS went out, and I don’t know exactly how to get where I’m going. How irritating.”

Unacceptable kvetch: every other fucking aspect of what this dumbass said.

I can’t imagine feeling safe with a computer driving for me. I drove in dangerous situations and I was afraid, like you should be in those situations, but I think I would be terrified giving control to a computer, even on a nice day on a straight road.

I have tried FSD a few times, it requires an exhausting level of hyper-vigilance beyond any situation when actually driving. You know it is going to screw up but you don’t know how bad it is going to screw up and that said screw up could occur at any given moment.

I think AV2 and AV3 are dangerous by their very existence.

Machines are very recent in our world. Before machines, we used animals. We grew to trust animals. There are lots of stories of people trusting a horse to get them home safely after getting hammered at an inn. My dad grew up with a mule that you could hook up to a plow, show her a field and the basic pattern of how to plow it and she would plow the field pretty much on her own. We are used to seeing animals learn from experience and adapt to what we want. I have a dog that sleeps in the bed with me every night that I wouldn’t trust to go 2 hours without making a mess back when we first got him.

Deep down in our lizard brains, we see machines sort of like animals. Most car people have named a car. We think it has feelings (this morning was cold and I felt sorry for my car having to start in that cold). We think they have personalities. Basically, sort of like how we consider a loyal dog or the like.

An AV2 or AV3 car can drive itself in most situations. It just needs a human to supervise and make sure it doesn’t do anything stupid and step in to help. I know a guy with a Tesla Y with FSD. He is convinced that the car is AV5 on the highway and the only reason it isn’t stated as such is because the lawyers don’t want to advertise something that is a 0.01% chance of a human being able to do it better.

The result is that even if a human is looking at the road and has their hands on the wheel and the like, their brain isn’t engaged. They aren’t scanning the roads for what might happen, because they trust the car (which is much smarter than it was originally after the updates) to do that.

Maybe they will start being super attentive, but after a month of flawless driving (because nothing happened), they start zoning out. Sort of like the time my dad zoned out and forgot to show the mule to not plow his mom’s flower garden.

He wouldn’t have to worry about getting lost if Tesla had carplay and android auto integration

So I was following a Tesla yesterday and either the driver was really out of it or the Tesla driving software sucks. As I followed I watched the car, multiple times, go over double yellow lines by a large amount, enough to cause traffic from the other direction to dodge the Tesla. I honked a couple of times, and it was obvious the driver took control as the Tesla sped up over the speed limit and appeared to stay in lane. I used to hate Priuses with a passion; Teslas now occupy that spot. Very unsafe cars out there.