Automated driving is not easy. At all. In fact, it’s pretty astounding that we’ve come as far as we have. The underlying tech behind all automated driving systems, even Level 2 supervised ones, is incredibly impressive. It’s the sort of thing that seemed nearly impossible until about 20 years ago, when Stanford University’s very modified VW Touareg won the Grand Challenge and drove 132 miles through the Mojave Desert all by itself, at a speed of around 19 mph. Now Waymo has fleets of robotaxis driving the busy streets of major cities every single day, and, for the most part, they do a pretty good job. Sure, there have been incidents and accidents, hints and allegations, but overall? It generally works. That’s part of why I’m so surprised about an incident with a Waymo passing a stopped school bus that has triggered an investigation by the National Highway Traffic Safety Administration (NHTSA).

Here’s what happened, based on video of the incident captured by a witness. On September 25 in Atlanta, this Waymo automated vehicle was seen ignoring the many flashing lights and pop-out stop sign of a school bus and passing it instead of stopping. This is, of course, a severe traffic infraction, with pretty harsh penalties in most states, because the reason a school bus stops and turns on that mini-carnival of lights is to ensure the safety of the kids entering or exiting the bus.

Those lights are so numerous and obvious because they mean kids are around, kids nobody wants to hit with a car. This is a big deal. Here’s the video of what happened:

Here’s a news report showing the same clip and including commentary from local officials:

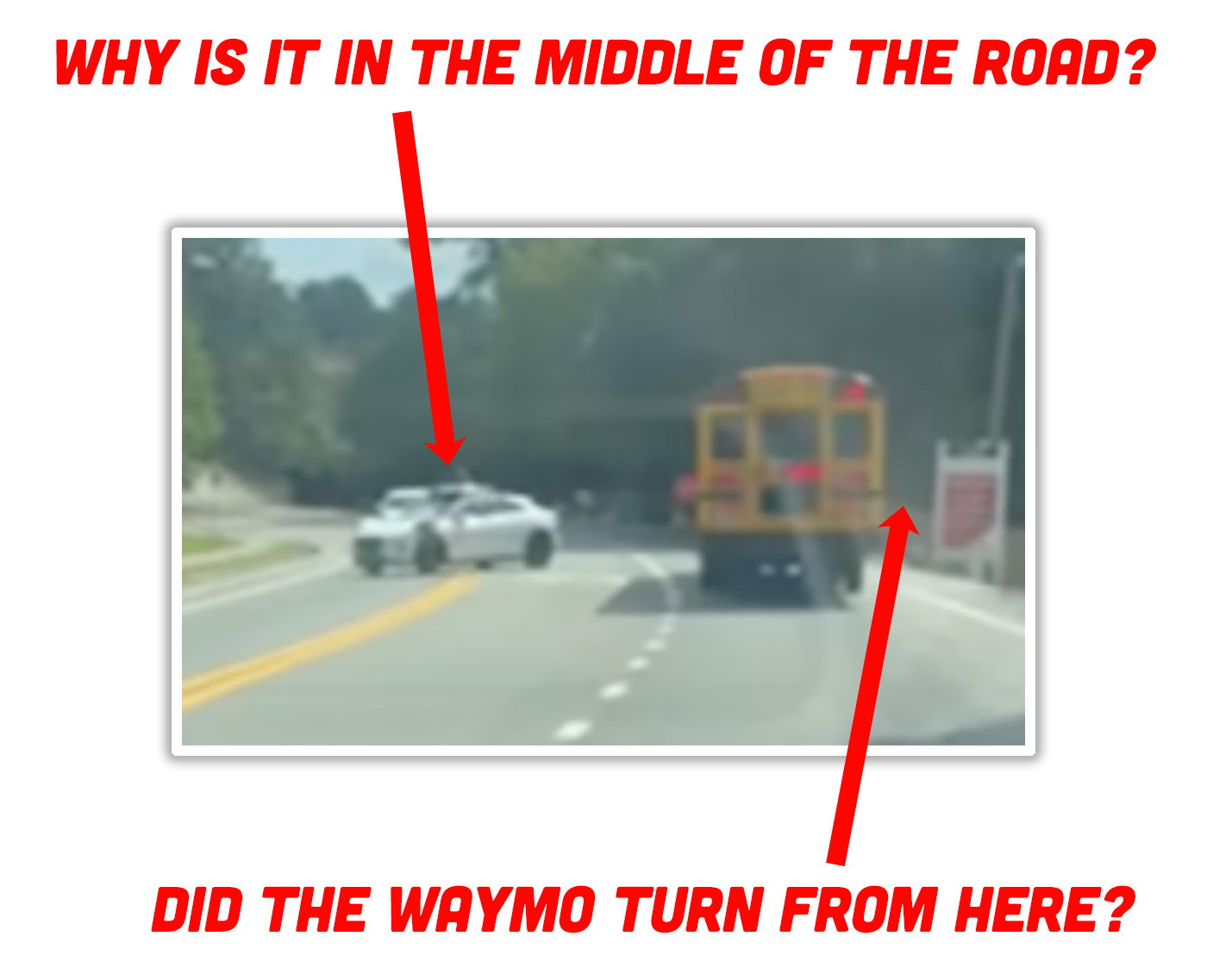

Let’s just look at what the Waymo did to try and make sense of it all; here are some stills from the video:

So, we see the Waymo’s starting position in front of the bus, sorta perpendicular, in the middle of the street, crossing the double yellow line. We’ll come back to that. You can see the bus had all of its warning lights blinking, the pop-out stop sign deployed, everything, and then we see the Waymo just cruising past it.

I suspect the Waymo must have made a turn in front of the bus to end up in the middle of the road like that? The video doesn’t include anything from before this point, so I can’t be certain, but I also can’t figure out how it would end up in this position otherwise. And, it hardly matters, because at this point the damage is already done: that turn should never have been executed in front of a stopped school bus with all its warning lights flashing.

The Waymo seems to be at least partially aware something is amiss, because it’s making that turn quite slowly, though it notably does not fully stop at any point. The narrator of the video states, “the Waymo just drove around the school bus,” which seems to support the idea that it made a turn in front of the bus, which is, in this case, quite illegal.

Now, here’s what I really don’t understand: how can this happen? Not “this” as in an AV making an error – we know there’s a myriad of ways that can happen – but this specifically, a school bus-related issue. I can’t think of an element that regularly appears on roads more predictable than a school bus. They have essentially the same overall look across the country – same general scale, same general markings, same general colors, same general shape, everything. They have the same predictable behaviors and the same warning lights and signs, they call for the same precautions when you’re around them, and they tend to travel on predictable, regular routes at predictable, regular speeds – every aspect of school buses should be ideal for automated driving.

Really, there’s almost no element of automated driving that is potentially more predictable and understandable than school bus identification and behavior. I know when you’re dealing with messy reality, nothing is easy, but in the spectrum of automated driving difficulty, school buses feel like an absolute gift. The Waymo should have seen and identified that school bus immediately, and that should have triggered some school bus-specific subroutine that told it to, you know, see if the flashing lights and stop sign are active, and if so, stop. And stay stopped until the bus’s lights are off and it’s safely away. This is one of those cases where a potential for false triggers is worth it, because the potential risks are so great.
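To make that idea concrete, here’s a minimal sketch of what such a school bus rule could look like. To be clear, this is not Waymo’s code or anything resembling their actual stack; the names `BusObservation`, `Action`, and `decide` are made up for illustration, and real perception output is far messier than four tidy fields. The one assumption worth calling out is that a signal the car can’t see gets treated as if it were active, which is the “false triggers are worth it” trade-off in code form.

```python
from dataclasses import dataclass
from enum import Enum
from typing import Optional


class Action(Enum):
    PROCEED = "proceed"
    HOLD = "hold"


@dataclass
class BusObservation:
    """One perception snapshot of a nearby vehicle (hypothetical fields)."""
    is_school_bus: bool
    # None means the signal could not be seen from the current angle.
    lights_flashing: Optional[bool]
    stop_arm_out: Optional[bool]
    bus_moving: bool


def decide(obs: BusObservation) -> Action:
    """Conservative rule: hold unless the bus is clearly done loading."""
    if not obs.is_school_bus:
        return Action.PROCEED
    # Only a confirmed "off" counts as off; an unseen signal counts as active.
    lights_maybe_on = obs.lights_flashing is not False
    arm_maybe_out = obs.stop_arm_out is not False
    if lights_maybe_on or arm_maybe_out:
        return Action.HOLD
    # Signals confirmed off: still wait until the bus is pulling away.
    return Action.PROCEED if obs.bus_moving else Action.HOLD


# A bus seen from an angle that hides both signals still produces a hold.
hidden_signals = BusObservation(True, None, None, bus_moving=False)
print(decide(hidden_signals))
```

As written, this would also hold forever behind a parked, empty school bus, so a real system would need some way to tell “stopped for kids” from “parked for the night.” The point is just that the default leans toward stopping.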

Waymo did issue a statement about the incident, though it doesn’t really say all that much:

“The trust and safety of the communities we serve is our top priority. We continuously refine our system’s performance to navigate complex scenarios and are looking into this further.”

Waymo also told Reuters that they have

“…already developed and implemented improvements related to stopping for school buses and will land additional software updates in our next software release.”

Referring to the specific incident, Reuters also reported that Waymo noted the robotaxi

“…approached the school bus from an angle where the flashing lights and stop sign were not visible and drove slowly around the front of the bus before driving past it, keeping a safe distance from children.”

While I have no doubt this is possible, it does open up a lot of questions. Did the Waymo vehicle identify the school bus for what it was at all? If there are potential angles where a school bus’ warning lights can’t be readily seen, should there be other methods considered? Audio tones, or even some sort of short-range RF type of warning specific to automated vehicles to help them identify when a school bus may have children entering or exiting? Again, school buses should be some of the most obvious and understandable regularly encountered vehicles on public roads; there’s no excuse to not be able to deal with them in a robust and predictable manner.

I’m sure Waymo is happy to have NHTSA joining them in this investigation, right?

Cause it’s a long, hard road

That leads to a brighter day, hey

Don’t let your batteries grow cold

Just reach out and call His name, His name

Waymo B there

Aren’t automated systems known to slam into emergency vehicles that are stopped with their lights on? Is there something about flashing lights? Are self-driving systems like the lady from The Andromeda Strain?

That’s Tesla’s useless camera only system. There’s a reason Waymos are covered in LiDAR sensors, they might still have image recognition problems, but they can’t completely not notice stationary objects.

How does this happen? Easy: every single person developing this tech is a childless 23yo male, and anyone who’s not that can’t get their ideas past the PM, who is. Of course they didn’t prioritize school busses.

Those stupid robot vehicles! So dangerous!

A HUMAN driver would NEVER ignore a school bus with its lights flashing and its sign out…

Oh, wait:

https://wnyt.com/top-stories/over-500-warnings-given-for-passing-stopped-school-buses-in-albany/

“WEST SENECA, N.Y. — Operation Safe Stop, a yearly reminder to stop when you see a school bus with its reds flashing, continues to be an important safety message because an estimated 50,000 drivers illegally pass stopped school buses every day in New York, according to Gov. Kathy Hochul’s office.”

https://spectrumlocalnews.com/nys/central-ny/CTV/2025/10/20/operation-safe-stop-2025

“The National Association of State Directors of Pupil Transportation Services (NASDPTS) reports that drivers illegally pass stopped school buses more than 39 million times each year.”

https://ncsrsafety.org/national-school-bus-safety-week-2025/

Robots shouldn’t have to be perfect, just better than the meat bags they replace.

The meat bags all have individual programming. The Waymos all have the same programming. That’s why they have to be held to a higher standard. Cause if one has a problem, they all potentially have that problem.

Or, in short, gaps in programming can cause WAYMO problems than individual dumbfuckery.

Not true. All meat bags are taught the same rules and held to the same standards.

“Cause if one has a problem, they all potentially have that problem.”

That is true of meat bags too. One finds a loophole in the law whether real or imagined and more follow. Just look at how many people believe speeding or running red lights when nobody else is visible is acceptable. Humans also make terrible judgements. When was the last time you saw a robot ignore multiple warnings and get stuck on 11’8 bridgecam?

The difference is the robots record metrics, the programmers can address the unique situation and a fix can be applied to the whole fleet instantaneously.

Ah yes, like the AWS outage that was fixed instantly. People are always gonna people, they’re never going to act the same.

Conformity, on the scale we’re proposing with autonomous driving, has the potential to MASSIVELY fuck up.

Even if the best case failure mode is they all immediately stop moving, now you’ve created instant city-wide roadblocks.

Worst case is they DON’T stop moving and collectively do unpredictable things while moving.

Sure, if you take down the network. It’s no different for meat bags. Turn off the traffic signals in any metro area and see what happens. Utter chaos.

I’ve pointed this out before but it seems I need to again: the robots are held to an unrealistic expectation while humans get a pass for EXACTLY the same scenario. For example, remember a few years ago when an AV dragged a woman what, 20 feet? OMG How DARE that happen!

Those same critics never mention the only reason that woman ended up under the AV was BECAUSE SHE WAS KNOCKED INTO IT BY A MEATBAG IN AN ALTIMA. The meat bag knew exactly what they did and drove off anyway. They are still at large. I dunno if anyone is even looking for them.

Meanwhile the AV, having no way to know that woman was underneath it (nor would a human driver have), realized SOMETHING was wrong and pulled over in 20 feet, out of the flow of traffic, which is the minimum possible. Despite doing everything correctly, it’s the robot that gets all the blame while the meat bag that caused the whole thing and did everything exactly wrong is ignored.

If the network goes down, an OTA update was wrong (cause tech companies NEVER push out faulty update packs), or a situation happens that hasn’t been tested for, there’s tons of reasons for it to go awry.

Meat bags, for better or worse, have decision making capabilities. They are flawed.

Unfortunately, meat bags designed the AVs and their software, so we’ve added the same flaw, just further up the chain. Despite all of AI’s best efforts to appear as consciousness, they’re just glorified if/then machines that can’t process new information without being told how to do so.

This is a prime example of how you can’t program your way out of a world that is coming up with infinite scenarios.

This is also why I have an issue with AVs as personal ownership. We all know how people tend to maintain vehicles they own. Poorly. Especially if it becomes an appliance that just brings you places.

An AV doesn’t know it has worn tires. An AV doesn’t know that it has deteriorated brake lines. It doesn’t know anything about itself outside of sensors and pre-programmed logic.

Sure, you could monitor everything with sensors. Then the car can spend the majority of its time not moving because sensor “X” is dead. If you deem it can run without those sensors, then we’re back to “it has no idea what condition it’s in”.

I’ve spent two careers, one in IT and one in heavy equipment repair, focusing on how machines fail, not how they work. Cause that’s the important part.

yes, and the software patch they made to correct this problem will keep every one of their cars from having this same problem again.

I ride an e-bike around downtown LA all the time and Waymos tend to give me more space than vehicles piloted by meat bags who are looking at their phone screens instead of the road.

Yeah, I don’t want Waymos going around my young daughter’s bus, but luckily (I guess?) I live in a crappy town that has no Waymos. Long time lurker (since barely after the site launched when I realized this is where Torch, DT, and Mercedes went), first time commenter, but I’m saving up for a membership.

I bet the Waymo mistakenly got the Mad Max OTA update.

They will forever be implementing “updates” to cover different driving situations and unique scenarios that a human being could creatively navigate on the spot, in the moment. Self driving tech will forever be behind the curve “reacting” after the fact, always a beat behind, forever.

Terms like AI, “artificial ‘intelligence’”, are used to obfuscate and mislead people into believing that these systems can problem solve, and they can’t. There is no “thinking”, artificial or otherwise.

People aren’t perfect drivers either, but I’d argue that a machine needs to be an order of magnitude better than any human, the best human driver, not just marginally better than the “average” driver.

And, there needs to be a rigid test that a self driving car needs to pass, not controlled by the people writing the software or designing the sensors. Minimum standards of performance. They can’t just get lucky.

I’ll bite.

Human drivers?

There’s Waymo kids out there than there should be.

Rebrand to Thaynos coming soon?

Snap! What an idea!

I wonder what equipment or spectrum the Waymo uses to see the bus. It certainly isn’t seeing a yellow bus with lights; it’s probably seeing a blip or shape, like sonar, while the lights only show up on a color spectrum. Not that this is an excuse, and they should be taken off the road until it can at least be explained.

I’m very glad they’re not a thing in the UK. I would absolutely never get in one. Plus, I think we’d be even more horrible to them than Americans are.

We talking school buses, or flashing annoying lights, or something else here?

Waymos. Tho we don’t really have school buses much, either now.

Well…

“Driverless cars are coming to the UK – but the road to autonomy has bumps ahead”

https://www.theguardian.com/news/ng-interactive/2025/oct/18/driverless-cars-uk-autonomy-waymo-london

Well, fuck.

Buses are not all similar colour, they are exactly the same colour:

Federal Standard 595-13415 aka “school bus yellow”.

Applied to us here in Canada as well.

They may start out the same color, but here in Florida in the sun they don’t stay the same color very long. They fade to a wide variety of variations of “School Bus Yellow”. And only PUBLIC school buses are required to be yellow anyway. Private school buses can be any color the school wants them to be. There are church school buses around here that are white that pick up and drop off kids just the same.

But could be worse, Teslas seemed to have a habit of aiming for emergency vehicles. Probably fixed by now. Maybe.

The way Waymo is screwing up, it’s like a real life version of “Maximum Overdrive”

or “Terminator 3:Rise of the Machines”!

Did someone ask about rants?

This illustrates the problem with technology. Technology I trust. Corporations I do not and this is a perfect example. First thing is to recognize a car is both a vehicle and a weapon. As such, it needs to be highly supervised. Traditionally, this is by a human driver who is, in principle, accountable. A suite of self driving car software is effectively one driver who drives multiple cars at once. So the software should be treated as if perfection is required, not just desired. In lieu of a human driver, the senior executives of self driving car companies need to be held fully accountable for the actions of their product. We have seen over and over again that if there is no “skin in the game” then executives will hide behind a corporation and get away with as much as they can. In this case Waymo seems to have a very faulty system for what is arguably the single most important feature of a self driving car: Not killing children. If I let my 12 year old drive and they did this I would face harsh penalties. Second thing: When they first started talking about self driving cars on public roads years ago, the first two thirds of the articles were focused on how bad human drivers are. Everyone knew that already, but the obvious intent was to lower the bar for what should be expected of self driving. In other words, they were implying it is ok to injure people through trial and error in the name of progress. If they had cared about the safety benefits they touted, they would have made a consortium that acted like the FAA used to act: Focus on safety, all crashes investigated and results shared across the industry, strict requirements for certification. Then they should have proceeded to develop their self driving around those regulations and requirements. With something as dangerous as a car and no codified safety requirements, they are programming cars to injure or kill people just as much as they are to transport people.
As it is, these automotive autonomous software makers are effectively rogue states experimenting on the local populace.

This perfectly summarizes my feelings on too many things in the modern world. In addition to all of your excellent points, I won’t be trusting any self driving system until the automaker is willing to take 100% liability for an at-fault accident while in use. This isn’t out of fear of the technology, I’m actually still in favor of the development of self driving systems because I agree that the “average driver” is terrifying in their disregard for others. My problem is the current systems make that even worse since the level of supervision required of the operator is actually MORE mentally taxing than actually driving.

I equate driving vs. supervising self-driving to the difference between doing a task and doing an over-the-shoulder evaluation on someone doing that same task. The person performing a task knows what action they are about to do and unconsciously applies an appropriate amount of focus based on its perceived difficulty. The evaluator has no idea exactly what the subject is about to do and has to be alert at all times to the potential for unsafe or incorrect actions, which is exponentially more mentally taxing.

Maybe this is why we all shouldn’t be subject to them beta testing these things on public roads.

When I start my own FSD company (maybe tomorrow, I don’t feel like it right now), we’re starting with recognizing school busses and the rules and regulations surrounding them, then we’re moving on to other things.

And this, ladies and gentlemen, is Exhibit #37,494 why self-driving cars are a fantastically stupid idea

You can correct the AI that does this, but can you correct the human that does this?

Yeah, enforcement and fines. Here in Tucson it’s sheer mayhem on the roads 24/7 but the second the ’15 MPH ENFORCED’ temporary school zone signs come up everyone suddenly does exactly 15 mph.

I’d never seen anything like it, so I asked my Tucsonan friend why people actually obey those signs so rigorously. She told me the fine for ignoring them was significant enough to get most peoples’ attention.

Your key word there is most. Can it be all?

I only put it as a caveat that I’m sure someone somewhere is ignoring those signs, but I’ve literally never seen it with my own two eyes. It’s always down to an absolute crawl when those signs come out, and I’ve never seen Tucson drivers so well-coordinated at anything else.

Plus, it could be your kids on the bus. It’s not gonna be the robot’s kids on the bus, is it?

It’ll be the robot’s dad driving the bus.

Godammit, it probably is, yes.

If you make the penalty death for speeding in a school zone, then it could be all!

Right, like all the crime with a death penalty has dropped to zero.

If they did it “right” then yes then it would drop to zero, because everyone that would speed in a school zone would be dead.

This is all hypothetical of course.

Can the machine be made to be 100% correct?

No. Neither can the human, so that’s not a realistic bar.

Can it be made better than a human?

Maybe. It’ll be a long time before we get there, and as a society I don’t think we should allow them to learn from their mistakes made on public roads where humans are involved.

My county, and Florida in general, have gotten REALLY serious about school zone and bus violations after a spate of kids getting killed the past few years. The county is installing outside cameras on every single bus, and they are prosecuting every violation. As they should. There are Deputies at nearly every school every day too.

I would be absolutely 100% in favor of strictly set speed cameras in every single school zone. There is just no excuse at all for either bad behavior, and the speed restriction is for all of a 1/2 mile a few hours a day.

Late 80s on a Grand Canyon road trip my mom got pulled over and ticketed for speeding in a school zone somewhere in AZ. It was early August and no students anywhere to be found but the local cops were still using the school zone as a speed trap. Mom was pissed. The only ticket she ever got. She was an elementary school teacher, too.

Are you okay allowing a machine to be driven recklessly on automatic just because we have humans driving unsafely?

We were just in San Francisco and these things were EVERYWHERE. The locals seem to have accepted and gotten used to them, but I basically stopped in my tracks to watch them until they’d trundled by and I’d satisfied myself that there was no way they could kill me. For what it’s worth, and not that it’s an excuse, but their default speed seems to be “we could have walked here sooner,” but that’s probably just because we were in densely-populated tourist areas.

I have yet to see one here in PHL, but I heard they were doing a trial.

“…approached the school bus from an angle where the flashing lights and stop sign were not visible and drove slowly around the front of the bus before driving past it, keeping a safe distance from children.”

OK, I get that maybe the stop sign on the driver’s side may not be visible, but the big yellow bus with flashing red lights on the front right above the windshield should be plainly visible to the Waymo. Fuck the Waymo spokesturd for such an egregious deflection of the fact that their taxi failed such a simple, obvious condition.

And that’s not even considering that it cut in front of the vehicles on the same road as the bus approaching from the opposite direction that properly stopped for the bus. It does not look like they have a stop sign, so the Waymo should have been waiting for them to pass.

Right, I want to emphasize that this is total and utter bullshit. The angle of the Waymo up to the flashing red lights on the front of the bus it passed is about the same angle as from the car to a stop light. Meaning, the cameras absolutely can and should be able to see the lights. This is absolutely just a case that was not adequately programmed in. Even more obvious because it wasn’t a protected turn, so the Waymo’s logic almost certainly said “Car on left stopped, no car on right, cross the road and proceed,” neglecting the fact that the bus had the right of way and had stopped to let out children, and that it did so with a dozen flashing lights.

If I ever get a job writing code for self-driving cars, the code would only have two lines:

IF(caught_breaking_traffic_law)

RUN(dead_men_tell_no_tales.exe)

I assume the default setting for any self driving BMW is to blast past any school bus?

Torch, I just wanted to say that I understood your Paul Simon reference, thank you for the whimsy.

What exactly is the law on the matter? Is traffic coming the other way also required to stop? I note there are two solid yellow lines. Some states define that no different than a divided highway, some require two sets of double yellow lines for that designation. The Waymo crossing in front of the bus is another issue, as well as the brain fart it appears to suffer when it stops perpendicular across the road.

According to Alabama laws, traffic in all lanes, two or four lanes or divided roadways, must stop for school buses when the lights are flashing. It’s clearly stated on the back door of every bus. That goes for inner-city neighborhoods and rural areas, especially where children have to cross the roads to get to the other side. Even occupied self-driving cars are subject to this rule. Violate this rule if you dare, and every cop, judge, and district attorney will have a field day embarrassing you. You may even get your name and photo featured on the front page of the local community paper.

I used to work in the education field, and I fully support this law.

> especially where children have to cross the roads to get to the other side

TIL human kids are basically chickens.

Imagine a 35 lb chicken with opposable thumbs running around your house, and you’ve got it

Do you chase it with a cleaver and a fake Swedish accent?

In the fiefdom known as Michigan there has to be a physical barrier in order for vehicles on the opposite side to keep driving. Otherwise you have to stop. The busses won’t pick up or drop off passengers in a way that requires crossing the road, but since kids are kids the State doesn’t consider paint lines as adequate.

Now that I think about it, I can’t recall ever seeing a school bus picking up or dropping off kids on a road divided by a barrier. If anything they will turn on to a side street provided the grid gives them a way to get back out easily.

It must happen in New Jersey where every road is a divided highway lol

For Ohio it’s fewer than 4 lanes, you have to stop. 4 lanes or divided, you don’t have to.

So Springboro Rd in my neighborhood with 4 lanes and a chicken lane, you don’t have to. Yet I see people almost cause accidents stopping for a bus on the other side.

I’m also in Ohio and not a single person is aware of how it works here. People stop on the opposite side of a 4 lane road for school busses literally every time I’m out. I guess it’s better that they’re erring on the side of caution, but it’s frustrating when you’re in a hurry stopped behind two people who don’t have to. People have also gotten insanely angry with me when they’re stopped in the opposite side of a 4 lane road for a school bus and I just drive around them.

As one who drives all over the country for work, and I was in Columbus OH last week, if there is a bus with its lights flashing, I am stopping regardless. If I inconvenience a local because that road happens to meet a weird exception to the general rule, too damned bad. I’m not chancing not knowing the local peculiarities.

If you’re not part of the solution, you’re part of the problem.

Springboro Road? Oh hai, Nasti Natti dweller 😛 I’ve driven that road when I lived there in late ’22-mid ’23 and yeah, seen that exact scenario. That road really just needs to be divided properly but that’s a whole nother can of worms.

Needs to be at least a 5 foot median to not have to stop in Florida.

Source: Cool guy that got 100% on his written test after flipping through the book once.

In most states, yes, opposing traffic needs to stop unless it’s a divided highway with either a median strip or barrier separating the directions. Some states make exceptions for multilane highways without medians, and some go stricter and require a stop even with divided traffic.

Like most everything in the US, it varies by jurisdiction. Which is part of the problem – people don’t necessarily live where they got their license. If they even paid much attention in driver’s ed to start with. And when you get re-licensed in a new state you generally don’t need to pass any sort of test, you just prove you’re you and they give you the new one. I actually did read up on Florida traffic law when I moved from Maine, and there are some pretty big differences. And of course, traffic laws also change over time – I got my license 39 years ago – a number of things have changed in Maine since I passed my test. I only know about them because I am a big dork with a rusty law degree who occasionally reads up on such things when bored.

IMHO, for this there should be a national standard, and it should be that unless there is an unclimbable barrier or a WIDE median (like wide enough you can’t even see the other roadway), all traffic in both directions should STOP, regardless of number of lanes. Children do goofy things like run out across roads even when they aren’t supposed to.

I was expecting more of a (justifiable) full blown rant from you.

So much over promising/under delivering for all these public beta testing technoturds. Trust us! We’re super smart! Got it totally covered! Constantly sending updates in no way indicates release was premature!

To solve this problem, fire all the bus drivers and install Waymo robots in every school bus.

If the guinea pigs (our children) survive, then we can do the same with transit busses.

Yep. Seems to be the Current motto

Double Down until the lawsuits drown

My district would love that, they already underpay the bus drivers and then wonder why there is a shortage

(also have to deal with kids).

They would jump all over autonomous busses like a dog on bacon.