It was over two years ago that I first wrote about the emergence of the generative image AI Midjourney as it applied to the car design process. My thoughts back then were it was nothing more than a picture slop machine for the lazy, cheap and talentless. And so it has proved. I’ve been blocked by more than one automotive company on social media for calling out their use of AI to create poor images when they would have been better served by commissioning an actual human to do real, paid, creative work.

I want to state for the record up front that I’m not a luddite. I’ll be 52 in a couple of months so clearly remember life before a computer in every home and the invention of the internet. I’ve witnessed mobile phones progressing from a bonce-frying handset attached to a car battery that goes flat in two hours to a slim metal and glass rectangle that is now an indispensable part of our lives. I buy nearly all my music digitally because if I didn’t I’d be living in a house made entirely of CD jewel cases. Technology in vehicles has given us better economy, lower emissions, more power, fewer breakdowns and safer structures. I’m onboard with these quality of life improvements.

But technology and the investor bros wait for no one as they get out of bed at 4am to begin their day by writing bullshit self-affirmations in an expensive notebook and dunking their inhuman faces into a bowl of ice water. Then it’s on to enriching their lives and enshittifying ours by forcing AI into every aspect of the world around us. I can’t even open a PDF in Acrobat now without an ‘AI Assistant’ icon popping up in the corner asking me if I want it to summarize the document for me. No fucking thank you, because I have a somewhat functioning brain that has learnt to read and comprehend. Did we really kill Clippy for him to return Terminator like, stronger and smarter, twenty years later?

AI Whack-A-Mole

Despite having Siri turned off as far as possible on my iPhone it still popped up uninvited a couple of times on a road trip last week. Why do I have Siri turned off? Because every time I’ve tried to use it for voice control of my phone while driving it’s been useless to the point of being extremely annoying – unable to do something as simple as playing the correct music track. To avenge Clippy, Microsoft now forces users of Windows 11 to have its AI assistant Copilot on their machines. And because of the way Windows structures its updates to be mandatory there’s nothing you or I can do about it. Everything is AI this, AI that – are we now so lazy as a species that thinking and understanding are too much effort? I feel like I’m playing AI whack-a-mole.

My slightly more serious thoughts two years ago about how AI might end up infecting the car design process were that, like life, technology would find a way and it wouldn’t be what we were expecting. An implementation I considered was something like a cloud based tool for solving 3D modeling patch layout problems, which could then be rebuilt by a human CAD jockey. What we’re getting currently, and what is being heavily promoted across car design social media, is AI technology to turn your quick sketches into full renders: creating fully detailed and colorized images from your linework using visual and word based prompting, and then using those for further design iterations. But before I get into examining how these new tools work and whether they are any use or not, I want to explain how designers currently operate – or if you want to sound like a professional designer, their workflow – so you can understand exactly what AI might contribute to the process.

What normally happens at the start of a new project is a manager will gather all the keen young (and one older and cantankerous black clad one) designers together and tell them what they want. It might just be a facelift, the next version of a current car or something completely new. For a car that’s currently in production this will take place about a year or so after launch as it gives the marketing department time to solicit feedback from customers (and crucially non-customers). Does the next version need to be more aggressive? Sportier? Does it need to tie into the rest of the range more closely? Is it too close to another car and needs more visual separation? It’s not meant to be too prescriptive, so the designers still have a lot of freedom to implement their own thoughts and ideas. There will be a deadline, say the following week when the designers will be expected to present their work and argue convincingly for their design in front of the chief.

Why Photoshop Is Such An Important Tool

Some designers like to sketch straight into Photoshop using a Wacom graphic tablet. My preference was to always start on paper using just the trusty Bic Cristal ballpoint. Working with a pen and paper forces you to commit and keeps things nice and simple when you are just trying to get ideas out. I did this so I wouldn’t be tempted to start fancying up my sketches too much at this early stage. It’s too easy when using Photoshop to delete things so you end up redoing stuff over and over until you’re completely satisfied. Then after a few days when I had something I liked I would scan them into Photoshop to be worked up into full color renders complete with details like wheels, light graphics, trim pieces and so on that would be suitable for presentation.

The benefit of working this way is that you don’t waste time on something you are not totally committed to. An analogy I like to use is you wouldn’t write an essay or a short story without a few notes to guide you. Movie directors use storyboards to figure out shots and story beats before hitting the big red record button on the camera. It doesn’t matter if you are doing quick line sketches on paper or in Photoshop, keeping it simple allows you to generate loads of ideas so you can understand what works and fits the brief. What’s more, simple sketches can be incredibly expressive on their own. Massimo (Frascella) was very keen on seeing our initial loose, scrappy ten minute thumbnails because he wanted to see our ‘working out’. It’s always possible the chief will like something the designer themselves doesn’t. This is another reason I always encourage my students to have lots of nice sketches in their portfolios – not just splashy full color Photoshop renders, because it lets me see their thinking and how they arrived at their preferred design.

If you are not familiar with Photoshop, put simply it uses a system of layers. Everything on a layer is editable without it affecting the layers above or below it unless you tell it to. What this means is you start off with the scan of your ballpoint sketch and then build a complete image using layers on top of this. I usually block out the main parts of a car using color first – dark gray for the wheels, body color for the body, a lighter gray for the glazing, darker for the interior and so on. Once this is done I group those layers into a folder and create a new group for the core shading – that is very simple lighter and darker areas that define the shape of the car. I repeat this with another group for the shadows and highlights, and then a final group for the details: light graphics, wheels (which I usually just grab from stock), sidewall graphics for the tires, badges and logos and so on. Finally I create a group of layers at the bottom of the stack for the background. This is a very simplified description but it gives you an idea. A basic render might end up with 20-25 layers. An involved and complicated one with lots of highlights and shadows might run to 50 or 60. But importantly all the layers remain editable. I once did a front graphic that Massimo really liked – he asked me to do another ten or so versions of it, which was an afternoon’s work to churn out ten identical versions of my design with different front ends. Pay attention to this concept of editable layers and how renders are created, because it’s one of the areas where AI render generation is lacking.

It’s important to understand the methodology here is the same as it has always been since Harley Earl set up GM’s ‘Art & Color’ section in the twenties. Only the tools have changed. With pencil, pastel and paper sketches you would draw ten different front ends, cut them out and then mount them over the original complete image. Working digitally is more flexible, quicker and cleaner because you’re not faffing about with scalpels and spray mount. Now you have a basic grasp of how the sketching and rendering part of the design process works, let’s dive into the murky world of AI render generation and try to understand how designers might benefit from it.

What Vizcom Is And How It Works

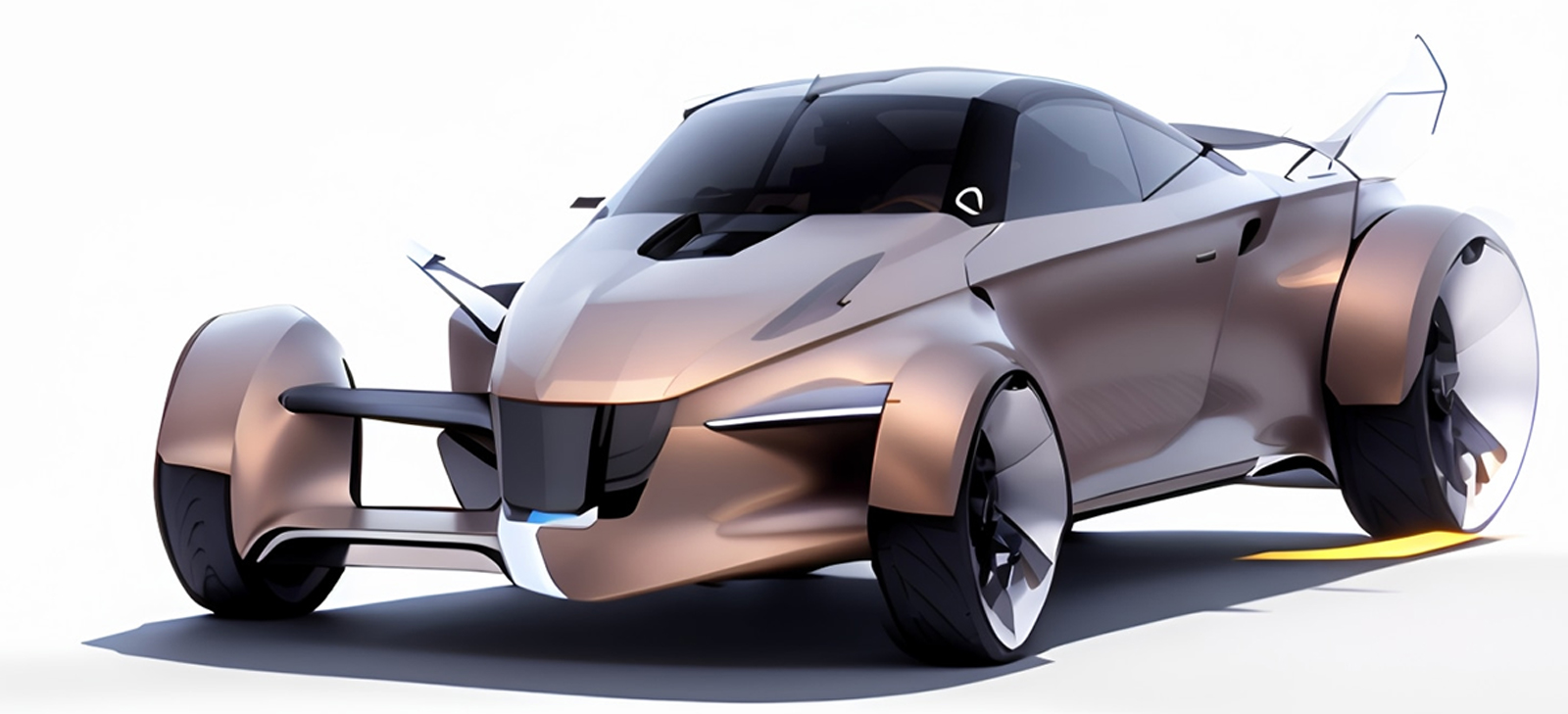

The most prominent of the current AI design tools is Vizcom (from Viscom, short for VISual COMmunication). Vizcom is a web based AI tool that, based on the pitch, can instantly turn your quick sketch into a fully realized, professional standard render, without having to grapple with all that tedious Photoshop work. I’ll get into the background of the company itself in a bit, but it’s enough to know that this is one of the main angel investor backed AI start ups looking to establish itself in the industrial design field. It pops up a lot on my Instagram feed. Freelance car designer and online tutor Berk Kaplan promotes it on his YouTube channel, and Vizcom have also hired well known concept artist and car designer Scott Robertson to feature in their videos. I signed up for the free feature limited version, and like the previous article I’ll use my EV Hot Rod project as a baseline because I knew what I wanted when I designed that car, so it will provide a useful basis for comparison. Below is the sketch I uploaded into Vizcom.

You start off by uploading your sketch into a sort of simplistic Photoshop type webpage, although there are basic pen tools if you want to draw straight into the software. There’s a layers panel on the left, some editing tools at the top and on the right are the settings for image generation. By altering the generation settings, you can quickly produce rendered images of your initial sketch and add them to the layers panel. What happens is once you have a selection of rendered iterations on separate layers, you then output them to the project whiteboard which Vizcom calls the workbench. The idea is they are all in one place for refinement and collaboration. This, as I frustratingly discovered, is fucking stupid. The problem is there is no way to go back to the original image generation editor you just left, with all your images on their individual layers. You can only double click on each individual image to edit or refine them separately. There’s no way to easily compare your sketch with the AI renders by simply turning the layers on and off. Once you leave that first screen, that ability is lost. Let’s try again.

The Results

This time I uploaded my pen sketch into the workbench, double clicked it to enter the editor and typed ‘matt black hot rod’ into the prompt box. Underneath this, you have three further option boxes. The first is for palette and sets the style of render you want. There are a few options here for different looks to your generated images: you can go for something more realistic, a flat cel-shaded look or more importantly for our purposes specific automotive exterior and interior rendering styles. There’s a slider to tell Vizcom how much the chosen style should influence the final image – I left it at 100%.

Here’s the result of using the Vizcom General shader. As you can see it has stuck closely to my sketch in terms of coloring in inside the lines, but everything else is shit. Both sides of the front suspension are different, the lower right hand side wishbone appears to contain a headlight, the wheels are shit, and it’s grown a television antenna from the rear fender. And it has totally ignored my prompt.

I tried again, using the automotive exterior shader. Vizcom has interpreted the linework better, but it thinks the tires are part of the bodywork as it has rendered them in the same way, instead of black.

This is using the Cyber Cel flat shader, a style meant to replicate high contrast concept art with simple color changes to illustrate lighter and darker areas. We have half a windshield, odd orange pieces added at random and the roof release handles in turquoise. Not what I would have chosen. At least it’s paying attention to my text prompt this time.

This was created using no verbal prompts and the Volume Render shader. My hot rod appears to have gained organic components I didn’t know about.

This time I set the shader to Realistic Product. This is probably the best result I got, but it still needs a lot of work. Again the suspension arms are different on each side, the wheels are far too bitty and the rear left hand suspension arm is now invisible.

At the bottom there’s a drawing influence slider – how much should Vizcom stick to your original drawing. If you set it to 100% it should follow your line work as faithfully as possible. The lower you set the influence, the more freedom Vizcom has to go outside your sketch and do its own thing. So I turned it down to 0% to see what happened.

Well that’s not really a hot rod at all.

What this shows is when Vizcom doesn’t have to follow your drawing, it can create something that is much more convincing as a car. That’s not surprising because it’s trained on images of cars. But when it has to render something original that is outside its training data set it struggles to make it realistic.

These are pretty much all unusable garbage as you can see. But there is also the option to add a reference image. I can’t show the images I used for copyright reasons (because I just grabbed them off the internet as a student and we’re slightly more law-abiding than that here) but one was a black and white image of an old Ford and one was a monotone faceted sculpture of a hot rod. Here’s what came out.

Absolute visual bobbins.

Here is one of my original renders that I did in Photoshop at the time and yes I know it’s a slightly different view but that’s all I could find seeing it was done over a decade and two laptops ago. The key to using any tool effectively is proficiency and understanding how to get the best from it. However, even in one of the videos I watched, Vizcom was rendering tires in painted metal, consistently couldn’t get wheels right and gave bodywork all sorts of weird non-symmetrical openings. I spent most of Wednesday getting to grips with Vizcom and it does have simple tools that allow you to isolate and re-generate parts of an image. So with a bit of time and effort it would be possible to get slightly better results than I managed in service of writing this piece. But nothing I got was remotely usable – they all need extensive editing. And remember when I described how I used Photoshop by building up an image in lots of layers? It gets a lot more difficult when you are trying to edit an image with everything flattened into one layer. Vizcom tutorial videos I watched for research specifically mentioned doing this as part of using it effectively, at which point we must ask ourselves, what is the point?

Trying To Shortcut The Hard Work Is A Fool’s Errand

I understand the temptation to offload the creation of renders to AI. When I was studying, rendering was one of the hardest things I had to learn. I bought multiple books and video tutorials including some by the previously mentioned Scott Robertson. As my own techniques improved I always thought he overly complicated things and found his methods too prescriptive, but the reason it’s so difficult is because cars are extremely complicated shapes with a lot of individual elements and materials. It took me years of practice to be able to replicate the paintwork of a car with realistic reflections, highlights and shadows, to understand how light affects matt and gloss surfaces, and to give the impression of transparent surfaces.

The Photoshop keyboard shortcuts are now hardwired into my brain to such an extent that using different image software like Sketchbook on my iPad creates a dissonance in my head. I don’t often have the time or need to do Photoshop renders currently so I’m a bit rusty but when I was doing them regularly of a car I was familiar with like the Defender, I could knock out a compelling image in probably three or four hours. Which to reiterate what I said at the top of the piece, is why you would only do them when you had a design you were happy with. For figuring out your design they are simply too time consuming.

Midjourney, the image generator I played around with two years ago worked off a Discord server (like the one our lovely members have access to so they can chat to us. So cough up). You gave an AI chatbot some typed prompts, and a few seconds later it would give you back a few images. The results were less than impressive and very unpredictable. This is how I described it in that original piece:

“But it’s a scattergun approach—you never know what you’re going to get. In a worst-case “let’s sack all the designers” scenario you could imagine the correct prompts could become a closely guarded corporate secret like the formula to Coca-Cola, but it’s not likely because the results are too variable, too subject to the unseen whims of lines of code masquerading as something it isn’t.”

The problem with Vizcom and the new generation of AI image creation tools is no different. They’ve been given a more usable interface and because it bases its images on one of your own sketches you have more control over the starting point, but the dice-roll of what you get out remains. I learnt to render by studying other people’s work and attempting to copy it, so I could understand the techniques they used. Once I knew what I was doing I could use that knowledge of brushes, vector masks, adjustment layers and other Photoshop tools to apply to my own images with a personal style naturally growing out of that learning process. If on a basic level you do not understand how to create realistic images for presentation, how are you going to know if Vizcom has produced something correct or not?

It’s Not Just About Making Nice Pictures

Critics or tech bros will say you do not need to understand something to know if it’s good or not – the old “you don’t need to know how to make a film or write a piece of music to appreciate it” argument. You don’t need an in-depth knowledge of arcane construction codes to enjoy a particularly good building. The flaw in this logic is that in these examples you are consuming, not creating. The purpose of nice rendered images is to sell your design to your manager and to give CAD modelers a reference to build a 3D version of your idea. If you don’t understand how surfaces interact with each other to create the shape of your design, how the hell are you going to sit next to an Alias or clay modeler and explain to them what you want? This is the issue with Vizcom. It doesn’t understand because it doesn’t have the ability to.

Imagine if you had a robot butler. You’d ask it to make you pasta sauce for dinner. It would go and read a million different recipes, collect loads of different ingredients and throw them all in a big pot. The resulting reddish mess would resemble pasta sauce, but the robot is not capable of realizing that is only one part of making a tasty dinner. Preparation, selection of good ingredients, building up slowly from a tomato base and then refining through constant tasting are alien concepts that are more important than just chucking shit in a pan and hoping for the best. You can’t easily separate the robot pasta slop back to its constituent ingredients to fix it either. Yet this is how AI image generation currently works. All it does is scrape millions of images of cars and text data pairs and slop the average over the bones of your line work. You’ve got to try and unpick and edit the result to correct the faults inherent in such a simplistic approach.

Unfortunate but Vizcom is built off Stable Diffusion, as stated by Vizcom CEO in public discord (dev team in blue). Vizcom claims to not allow use of artists names (unsure how realistic it is). Yet this is easily bypassed as models are still built and rely on artist’s work. pic.twitter.com/AX8vTLaZ5S

— Karla Ortiz (@kortizart) March 2, 2024

So while Vizcom is being sold and promoted as a time saving tool, I’m not convinced it’s anything more than the latest Silicon Valley tech fad. The CEO ironically had a past career as a car designer. Now as a co-founder there’s been the usual Forbes puff piece detailing the rise from eating Costco hot dogs to funding rounds and a Mountain View headquarters. According to the linked Forbes piece Enterprise users of Vizcom can use their own proprietary image sets but more problematically Vizcom is trained and built on Stable Diffusion, an open source deep learning text to image AI that was itself trained on the LAION-5B dataset, created by crawling the web for images without usage rights. Getty Images is suing Stability AI in UK courts this summer, but this isn’t the forum to discuss copyright issues and I am not a lawyer, so a reasonable question to ask is, how is this any different from a designer keeping a Pinterest board full of mood images and using them as inspiration?

Both as a student and as a professional designer in an OEM studio, I deliberately made a point of not using images of other people’s cars on my mood boards. This wasn’t for copyright reasons, but because I didn’t want them to influence my work. I might (and in fact do) keep Pinterest boards of interesting vehicle sketches and renders I like, but I use these to learn and understand how they are done, and in some cases because I like the combination of colors, background and style and want to apply them to one of my own renders. I’m not copying the actual design. A good and conscientious designer might find a product detail they like and then reformat and transpose that idea into their own work. For instance, the interior of my hot rod had seats made of patches of rough black leather, bound together with big white stitches. Of course the inspiration for this came from Michelle Pfeiffer’s Catwoman costume in Batman Returns. I was inspired by a very specific aesthetic that was personal to me, subtly altered and adapted it and used it in a unique way on a different object. This is something only a human designer can do. If my interior images and ideas were scraped into the data set Vizcom uses, they would just end up as a tiny part of visual slurry piped onto someone else’s sketches. All the individuality, meaning and nuance would be lost in the mix of pixels. Vizcom cannot differentiate because it only looks at images, not the meaning and context behind them.

I can make Photoshop give me exactly what I want because I spent years learning the software and techniques required. I can change details and proportions of a render with a few flicks of the wrist across a Wacom tablet. I can illustrate an accurate bodyside because I have painstakingly made and saved my own set of brushes that I use to make highlights and shadows. I have actions set up to make creating wheels easier. Trying to short cut all of this with AI feels like a solution in search of a problem and not a particularly helpful one. Anything you create with Vizcom is going to need fixing in Photoshop anyway.

So you might as well put in the hard yards and learn how to render because like any digital tool Vizcom is not doing the creative work for you.

I have very limited experience with AI, beyond poking at the image generator that’s now built into Paint in Windows 11. I do think it will eventually have its uses in some fields, particularly in some branches of science and medicine, and apparently it’s going to create some interesting jobs (yay ~~Three Mile Island~~ Crane Clean Energy Center!). The use of AI as an image generator is increasingly irritating for me though. Yes, AI has gotten better, but it’s not *that* great, and people keep falling for it! Take the recent social media trend of creating an AI image of an action figure with accessories…they’re cute, but certain inconsistencies with lighting, depth, etc. make it painfully obvious to me that they’re not real. Yet that doesn’t stop people flooding the comment section demanding to know where they can buy one.

Poor Luddites, people don’t understand them. If anyone is interested in reading some history, I recommend a book: Blood in the Machine by Brian Merchant.

That sounds very much like my sort of book, cheers.

With respect to your work and years of experience, there’s an often overlooked subset of people like myself who enjoy using Vizcom and Midjourney as a way of getting our ideas on to “paper” because our skills simply aren’t good enough. We want to be talented, we want to be able to show the world our ideas and visions but we can’t. It is INCREDIBLY frustrating. I see “real” artists harping on AI all the time because they feel offended by it, and I get it, but they have the abilities and the skills I don’t, and it’s not like I was going to hire anyone for this anyway because I’m too broke and I want to be able to do it myself. In high school all I did was draw. I drew a lot of concept cars and was kind of obsessed with them. I wanted to be a car designer and even naively dreamed of one day having my own company lol. I loved industrial design, still do, and studied for a bit, and while I had some talent, I saw that it wasn’t good enough compared to people like yourself. I saw how competitive it was and figured I couldn’t hack it. I still do my best to design things but I can’t always get what’s in my head on paper, and for decades I fantasized about having a machine that could just see what’s in my mind and put it out into the real world for other people to see. If I could just get it out there then people might understand lol, cause that’s really all I wanted, to be able to show the world my ideas, even if they weren’t all that great, because having an idea stuck in your mind and being unable to express it hurts. So finally, we have something that can do that to some degree, and all I see are real designers crapping all over it. I love it because I can take a crappy sketch that I’m having a difficult time visualizing and see it the way it should be. The first time I did it, it felt amazing. I almost cried lol.

I was a bit disappointed, in myself, because I still wanted to be able to do it myself, to have the skill you guys take for granted, even after practicing for years. Seeing a machine do it isn’t nearly as fulfilling as it would be to do it myself, but it helps me practice too, it allows me to see the shading work I need to do to accomplish what I want with the design, even if it isn’t 100 percent accurate, I can then take it from there and make it look the way it should. It’s invaluable to someone like me, quite frankly, and I guess it just kind of offends me when people with talent and success who got to live one of my dreams dog on it, because it helps me out immensely with visualizing. If I could have been a talented concept artist and car designer I would have been, but for now this will just have to suffice. If it helps me communicate my ideas and see them in front of me I don’t care what the tool is.

Sorry I missed this comment before and I think you deserve an answer.

First of all, I do understand. I was a (mature) student for six years, and from time to time I do teach car design at masters level. So I have a lot of experience with both sides of the desk.

I think the first thing I want to say is, if you just want to muck around visualizing your sketches for yourself and bringing your designs to life for your own creative fulfillment, there is nothing really wrong with using Vizcom for that, although it’s not particularly cheap at $40 per month.

The issue, as I tried to explain, is it isn’t really helping you in the long term. To a layman the results it gives you might appear impressive but a professional will spot the errors straight away.

You are right, any kind of design sketching and rendering IS hard. Doesn’t matter if it is industrial design, fashion sketching, rendering cars. It took me sketching and using Photoshop almost constantly for SIX years to get good at it, and like everything there is always someone better. I was never the best at it but I eventually achieved a professional standard. The harsh truth of the matter is not everyone can do it to the required standard (although I do believe that everyone is capable of at least mastering some basic drawing skills). That being said, there is an abundance of online tutorials, free and paid, as well as books that can help. It’s tough and I understand that, but it is an extremely competitive field. The number of kids who WANT to be car designers far outnumbers the number who are CAPABLE of being car designers, and the number of jobs available is even smaller than that. And that’s before we get into other issues like education, location and even down to what passport you have. It’s an uncomfortable truth and life lesson to learn this. Merely having the desire and even good ideas is not enough. You have to be the complete package – have a good idea, bring that idea to life through visual media, convincingly sell your idea and then usher it through to physical form. You have to be a good fit for the studio environment, have a very broad understanding of all kinds of cars, the market, history, branding, modeling and customer behaviour. It’s a lot, and only the very best succeed.

It might sound like I’m being down on people who I think are not good enough – I deal with this issue with students all the time. It’s about getting across to readers a better understanding of what car designers actually do. I’ve said it before: the sketching and rendering is the rock and roll part that gets all the attention, but the reality is it forms only a small part of a working designer’s life.

The only job I wanted to do more than design cars was fly a plane. I know now as an adult that was never realistic (my background), or even physically possible for me (I’m autistic and have Dyspraxia). Does that hurt? Yeah a bit. Possibly the reason I succeeded in my second choice was because I did it as an adult and took my education seriously, and I was mature enough to understand everything – both said and unsaid, that was required.

You’re using it wrong; best bet is to take your sketch, and do volume shading and highlights, then use Vizcom to ‘pimp your ride’.

That said…. Fuck AI. This shit is destroying the design community, and the entire industrial design community is cooked, they just don’t know it yet. I have 23 patents and almost 2 decades of products with a pretty dang good portfolio (https://www.gruvdesign.com) and I can’t find SHIT in terms of jobs right now. Every company is scaling back due to tariffs, but also because they need fewer designers now, and on top of that, you just know some of the no talent ass clowns in marketing are just going to go on to these AI tools and type “give me a sexy toaster that looks powerful” and get 500 concepts to pick and choose from. They can then take their favorite sketch to gain ‘key alignment from stakeholders’ and pass it off to engineering and let them make it real.

I’ve always embraced technology, but I never thought my decades of skills of sketching, rendering, and design experience would be so undervalued and bypassed.

And I hate to say it, but the common argument is “yeah it can make pretty pictures but it won’t necessarily know how to make things manufacturable or ergonomic, etc”

The reality is the key decision makers are often out of touch boomers who don’t know wtf they are doing, and ‘sexy renderings with dramatic lighting’ are often chosen over superior designs, because humans are inherently emotional.

So anyway…. if anyone needs an ID guy with 20+ years of experience in powersports, medical, industrial, and consumer goods, with 20+ patents let me know.

Wasn’t ID already cooked before AI? I kept considering going back to finish studying but everyone online made it sound like all the good jobs were being outsourced and most people were moving to UX.

I mean, I never found problems finding work. Even in 2009.

Also, as someone in film, I sympathize.

This is the problem with AI, it can produce stuff that looks ok to a lay person, but if you use it in an area that you have experience in, you realise that sometimes it gets stuff completely wrong. The problem is, if you’re not already proficient in that task, it’s not always obvious that it’s wrong, or what part is wrong.

Yeah, wait til you find out the key decision makers in most companies are boomers with out of touch opinions that choose concepts based on dramatic lighting over anything else.

What you’re describing is what a friend of mine called “slop” in current AI use at Python this past weekend. It’s a huge problem and one that needs solving. AI *is* able to assist artists in specific ways, but it depends on how and why we use it. It’s such a powerful and good tool in many ways and it’s being completely mishandled by tech bros atm.

https://youtu.be/Nd0dNVM788U fwiw

And to echo you, AI cannot be a substitute for the work. It can be a tool on the palette, though, used in ways that adjunct creativity, not as a substitute for it.

Yeah, but too many people are already replacing work with slop because they don’t share this view.

It’s true, @Aaronaut.

I think the thing that’s hard is that I don’t think any of us commenting here are being irrational; I think that many are conscientiously and rightfully scared of new technology that has been frankly harnessed by the less savory parts of capitalism, and lazy (and angry!) people. Especially those of us who are creative, and see the threat that these uses of AI pose to our livelihoods.

But the problem with us *denying* their use or saying fuck AI in general is that the “they” in this case gets to run the machine. I don’t want to get political or polemical, but there’s starting to be a dividing line there. The more people with good intentions engage *with* AI, as my friend sees it, the less “slop” it CAN put out.

For instance, I use ChatGPT to occasionally proofread articles I’m writing, or for help with structuring certain artistic formats, because it can pull from very specific things. I ask it if my arguments are cogent, and it can point out where I’ve lost my track. That is useful, and that’s about as much direct help as I want from an LLM.

My friend in the linked talk above is using it to manage the backend of organizing and writing a novel, for instance, outlining and organizing the folders with her basic ideas, the kind of stuff many writers might use a cork board and index cards for. But if my friend were to attempt to do that physical labor (she has fibro) that would knock her out for days. For her, it’s truly an assistive device. Very few arguments against AI, or for its use, take into account ableism or disability rights, and how AI can help!

So: People *could* be using AI as agents to organize their files or to automate certain pieces of their system, but they’re being lazy. It should not be the first stop; it should be an assistant. I don’t ask it to write things for me. Believe me, I’ve tried just for fun, and it can’t do that. It can give me structures, it can give me potential outlines, it can even give me some ideas based on something that I’ve thrown in there, but it really is garbage in/garbage out.

It’s not a replacement for humans and cannot be, and that is the mistake these tech companies are making. Eventually, their systems are going to crash, because at a certain point, the slop takes over, as Adrian has demonstrated. Think about that on a larger scale. For instance, I live in the San Francisco Bay Area and every single ad on the San Francisco side of the water, in the techbro side of town, is for “AI to streamline your business!” or like “imagine your business with less people!” kind of stuff. That’s the mistake. That’s unsustainable and not what LLMs are built for.

I also ask AI to talk to me like a friend—and seriously, when you do that the machine responds *differently*. AI is recursive and the more you engage, the more it will respond to your own style of communication and mirror it.

I find it fascinating, and less frightening than I used to because I started using it as an “agent” who’s like a little helpy friend rather than a thing that can generate the content that I am seeking to make. So in that sense, I unironically (yet ironically) kind of use AI more like Clippy. (I miss Clippy!!)

That’s a great perspective, and I did appreciate your friend’s talk BTW. On the one hand, I’m waiting for the image generation to get better so it can be an actual tool instead of a time suck… but yeah, on the other I hate the down-the-line implications of how it will get used (and how people already use it).

I was going to put in a bit about appropriate use of new tools, but I would be repeating myself from the first piece. Gravity Sketch is another one I’m utterly unconvinced by.

I can’t even imagine how hard it must be for people who are specifically designers to see this technology and the shitty things that it can do, and I just wanted to acknowledge that.

What annoys me most is people promoting it who should know better.