I was a little surprised to see all the attention around an admission in court from Tesla’s Head of Autopilot Software, Ashok Elluswamy, that a 2016 video used on Tesla’s website to promote Autopilot was faked. It has long been known that this video was edited to remove a part where the car hit a roadside barrier, and that the whole route was pre-planned and pre-mapped, contrary to what the video suggests. This has been known since at least 2021, when I wrote about it. I suppose what’s different now is that it’s being openly admitted under oath, in a lawsuit over the fatal 2018 Autopilot crash of Walter Huang.

The testimony also brings up other uncomfortable realities about the Autopilot program, but I think what’s not being discussed enough is how this 2016 video really defined the path that Autopilot (and later, the so-called Full Self-Driving) would take, and how that path is increasingly looking like the wrong one.

If you haven’t seen the video, you’re not really missing all that much. It’s primarily a driver’s-seat view (with cuts to other camera views) of a Model X making a drive, with some sections sped up so the whole trip fits into the video’s 3:45 runtime. The Rolling Stones’ “Paint It Black” plays in the background, and eventually the Tesla parks itself at Tesla’s offices. It’s impressive-looking, no question! But I think the most significant part of the video comes at the beginning, when this text is displayed:

The text reads “THE PERSON IN THE DRIVER’S SEAT IS ONLY THERE FOR LEGAL REASONS. HE IS NOT DOING ANYTHING. THE CAR IS DRIVING ITSELF.” These three sentences, I think, represent the core of all of Tesla’s missteps with Autopilot and FSD from that moment to the present.

This text became something of a template for independent Tesla-fan YouTubers, who replicated it in their own Autopilot videos, as you can see here:

The text isn’t ambiguous at all. It says, very clearly, that the human in the car is “not doing anything,” and were it not for the cruel killjoy of a legal system, would not even need to be present in the first place. And, of course, it states, unquestionably, that the car is “driving itself.”

In his testimony, Elluswamy made a number of details about this video clear. According to the Reuters story:

To create the video, the Tesla used 3D mapping on a predetermined route from a house in Menlo Park, California, to Tesla’s then-headquarters in Palo Alto, he said.

Drivers intervened to take control in test runs, he said. When trying to show the Model X could park itself with no driver, a test car crashed into a fence in Tesla’s parking lot, he said.

“The intent of the video was not to accurately portray what was available for customers in 2016. It was to portray what was possible to build into the system,” Elluswamy said, according to a transcript of his testimony seen by Reuters.

Of course, this is all a huge deal because Autopilot, and later FSD, are not systems where the car is ever completely driving itself, or where the human should ever be doing nothing. They’re Level 2 systems. They require a human being in the driver’s seat, alert, aware, and ready to take control with minimal or even no warning. I’ve discussed the inherent flaws of Level 2 systems here multiple times before. Portraying a Level 2 system as more capable than it is, with no disclaimers at all, only compounds the existing problem of how humans interact with and over-trust these systems, which is at the root of what goes so wrong when Level 2 systems fail.

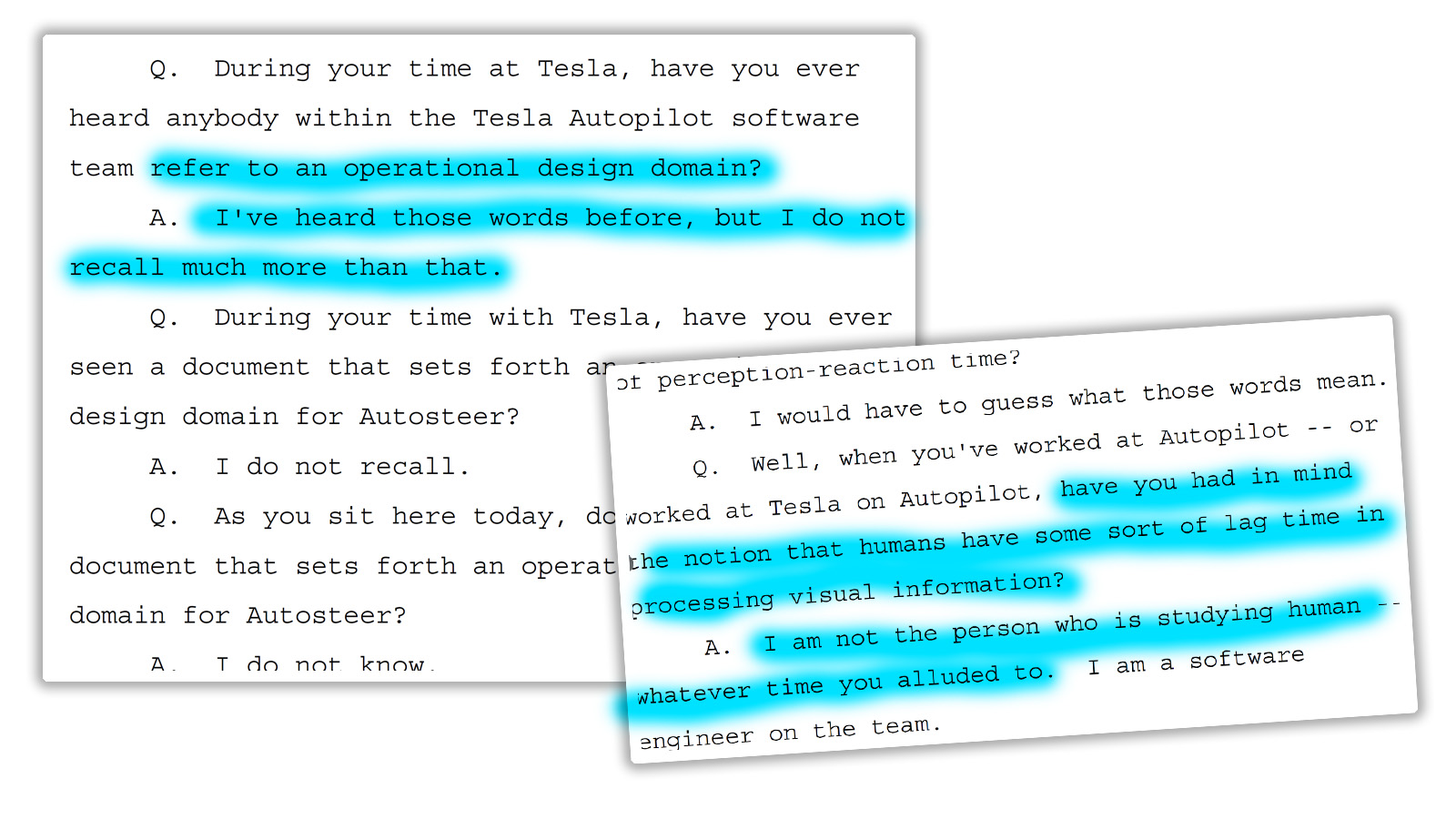

Other parts of Elluswamy’s testimony raised more concerns, like his apparent unfamiliarity with some very basic autonomous driving concepts, such as Operational Design Domain, which just means the set of environmental conditions an automated vehicle is expected to operate within. These issues were noted in a series of tweets by automated vehicle engineer Mahmood Hikmet:

I don't know if I can put into words how terrible this is, but I'll try.

This quote is from a deposition of Ashok Elluswamy, Tesla's Head of Autopilot Software relating to the 2018 fatal Autopilot crash of Walter Huang.

He doesnt know what an Operational Design Domain (ODD) is. pic.twitter.com/SqeE7xp2m4

— Mahmood Hikmet (@MoodyHikmet) January 15, 2023

Hikmet points to multiple places in the testimony where Elluswamy says he is unfamiliar with basic autonomous vehicle design and operational concepts, such as perception-reaction time:

I honestly don’t know how to process this. It just doesn’t seem possible that someone in charge of Autopilot wouldn’t at least be aware of what these concepts are. They’re really ground-floor kinds of ideas: what conditions the system can operate in, and how long a handover to a human driver can be expected to take. This is day-one stuff. I’m genuinely baffled.

Now, all of this is important to note, but it’s also tangential to the main point here: the conclusion that all this renewed scrutiny of that 2016 video has brought me to. My conclusion is that this video represents a way of thinking that has doomed Tesla’s entire automated driving program, and, I think, needlessly, because so much of the underlying technology is genuinely impressive and has massive potential to do good.

You can think of it like this. Once Tesla decided to develop Autopilot and FSD, it had two paths it could choose to go down. One path would be to use the technology as a backup to the human driver: a pair of AI hands hovering invisibly over the driver’s, ready to leap into action when it becomes clear that the driver has done something that could cause harm.

In this approach, Autopilot would have become a safety feature, pretty much exclusively. There would never be an expectation that the driver is anything other than paying attention and, you know, driving, but if fatigue or poor conditions or distractions or having to pee or whatever caused the driver to make a poor decision, Autopilot would be there, always ready and alert, to take over and help correct potentially fatal mistakes.

There is actually one company I know to be taking this approach: Volvo, through its partnership with Luminar. (I have an extensive interview with the Luminar CEO about this very subject that I really need to get published here soon. I’ll get on that.)

Of course, this method isn’t very sexy. It doesn’t suggest you can relax and not really pay attention, it doesn’t hint that you don’t really need to watch the road, that maybe you could check out your phone or play a game or even ride in the back seat, because, hey, really, the car is driving.

The other method, the one Tesla has chosen and still sticks to, is what I just described up there. It’s Autopilot as we know it, where the human is secondary, monitoring the AI, waiting to see if they need to take control—something humans are terrible at. It’s the sexier approach that, while legally saying all the right things about paying attention and being ready to take over, still implies that you’re living in the future, in a car that drives you around like a robot chauffeur.

That 2016 video, with its misleading opening text and (as we now know) fundamentally disingenuous nature, came to define Tesla’s Autopilot path, and that path was one that played up the “this car will drive for you” and “this car will be a robotaxi that will make you money” angles instead of the safety angles.

Sure, they tout alleged safety advantages plenty, but had they made Autopilot something that worked with a driver instead of a half-ass replacement for a driver, they would have had something with unquestionable safety advantages. They could have leaned into that. This isn’t the 1950s anymore; safety does sell cars. Had Tesla taken this approach, Autopilot’s considerable merits could have been appreciated without caveats, because its deficiencies and potential for misuse simply wouldn’t be such an ever-present factor.

Autopilot is technically very impressive. It does some amazing things, and when you look back at how far this has all come since Stanley the VW Touareg won the DARPA Grand Challenge in 2005, it’s really quite staggering. And that’s what makes all of this so frustrating: The tech works in many contexts, but Tesla’s entire marketing of Autopilot and now FSD has always been about pushing expectations of it into places it’s just not suited for.

Is this all Musk’s decision? Or a collective decision from Tesla’s marketing teams? I really don’t know for sure, but I do know that Musk has consistently pushed people’s expectations of what Autopilot/FSD are capable of, with tweets like these:

This is why it’s possible for Tesla to have a million robotaxis by end of 2020 if we upgrade existing HW2 fleet of ~500k & make at least ~500k FSD cars

— Elon Musk (@elonmusk) July 8, 2019

Agreed. That’s approximately date when we expect Enhanced Summon to be in wide release. It will be magical. Lot of hard work by Autopilot team.

— Elon Musk (@elonmusk) July 16, 2019

Or when he said nailing Full Self-Driving is “really the difference between Tesla being worth a lot of money or worth basically zero” last year.

There are many more examples, of course. But it’s clear that Tesla’s loudest, best-known voice has consistently suggested that the automated features of Teslas are much more capable than they really are, and, again, we can see this in the very first frame of that 2016 video. And that video pushed the rest of the auto industry and its associated tech startups to dump $100 billion into this field without much to show for it beyond a few robotaxi services here and there and glorified cruise control.

So, with everyone talking about this video again, I just want to frame it for what it really is: a manifesto of how Tesla would approach their semi-automated driving systems. It’s like a historical artifact now, a record of a choice made at a crossroads. I think as time goes on, it’ll become more and more clear that it’s also a symbol of the wrong path taken.

Autopilot could have been a true safety revolution, instead of good tech being presented in a messy and borderline negligent way.

I am baffled by the use of “little or no warning”. Isn’t the whole point of a Level 2 system that warning the driver is _not_ required, because the driver needs to monitor the situation at all times _themselves_ and make the call _themselves_ that the autopilot is doing something it should not do, even if the autopilot is fully “confident” it is doing the right thing? Like when the autopilot is “confidently” turning the car into the path of an oncoming tram, or the wrong way into a one-way street?

If I was an astronaut, I’d be looking for the manual override if asked to fly on a SpaceX Falcon. And if I was aboard the ISS, I’d be really nervous during autodocking with a Falcon. No telling where that thing might park itself.

Once again Jason, thanks for trying to put the Level 2/FSD issues into context – sadly I suspect you’ll get another dose of hate mail, but the points you’re making are important and need to be made.

Quelle surprise: Volvo leads with safety over sexy. I mean I would rather live through an accident than cause one by =ucking in the back seat with my GF

https://www.sae.org/blog/sae-j3016-update

Levels 0 through 2: “You ARE driving”

Adaptive cruise, lane keeping, emergency braking and so on are all there to help you, the driver, who is driving. Did I mention the driver is supposed to be driving with Level 2 systems…?

The problem has always been Tesla (and others) pretending that their Level 2 systems were capable of so much more and they were just labeling them Level 2 on some legal technicality. That’s right, Joe Consumer, we’re just saying you should keep your hands on the wheel because of litigation, but you can totally take your hands off the wheel and pretend our system is Level 3 or even higher. Go ahead, stick that water bottle in the steering wheel and watch videos on your phone. Everything will be fine and we’ll get tons of data on our beta s/w. Who cares if you crash because we’ll still get good data (unless it’s so bad the transmission stops). Of course, when you do crash, we’ll blame you because you should have been driving. It’s in the beta agreement after all. I’m sure you read it…

Maybe this isn’t the point, but I watched part of the linked drive video (which a Tesla fan was proud enough of to set to music and publish) and it was horrifying. The car repeatedly stops well short of where it should in traffic. It repeatedly passes way too close to pedestrians and cyclists. Great job on an empty road, I guess. No one would accept this while teaching someone to drive. This thing hasn’t got the skills to pass a driver’s license test, and therefore shouldn’t be allowed to operate a car.

Someone over on Ars posted a link to this that I hadn’t seen before: https://www.youtube.com/watch?v=77cbSy-rBEQ

This guy is covering up sensors and seeing how well the FSD handled it. As if FSD wasn’t horrifying enough… It’s incredible just how much he can cover up and the car will still let him use FSD. At one point it fails to start, apparently due to the obstructed sensors, but then he tries again and it works.

This might sound odd, but I’m actually going to defend Ashok on one small point.

Not knowing what ODD is doesn’t intrinsically make him a bad developer. Some people (and I know this, as I’m one of them) fundamentally understand the concepts even if they’ve never heard them spelled out.

Anyway, it’s just a small point. Should he be working in such a field, where safety is everything?

I’d say hell no. There’s too much risk of misunderstandings.

And once again I wonder how long before Tesla drops the pretense over FSD? And what happens when they do? The company’s rep will take a big hit, but would it affect ongoing operations much?

One has to think Musk will be pushed out eventually, and that would be a good time for it. Blame him, then bring out some refreshed designs to make everyone forget the weird past.

But the head of autopilot software should know the common nomenclature and damn sure should have seen some documents. But the part that concerned me most was that he did not recall any conversation around human visual processing lag. You know, the thing you have to account for if you expect a human to take over driving responsibilities.

As to Tesla dropping the bullshit, I think that would be the smart move if they can push Musk out. Start referring to things as driving assistance or something, stop promising robotaxis, and update the cars. The brand is still the first thing people think of for electric cars, so they could pivot and probably do well.

Yeah, the sooner Tesla drops the bullshit, the better for them. Musk’s popularity seems to be fading, and since he’s so closely affiliated with Tesla, it’s hurting them. Between that, and intense competition from nearly every automaker, Tesla might have a rough road ahead. It doesn’t help that they haven’t had a new model in years. Which was fine when they were the only game in town, but not so fine with the amount of competition that’s coming to the EV space. The Cybertruck is still supposedly coming, but I highly doubt that it will ever be a volume seller. Especially because it looks like nearly every other automaker with electric truck concepts will have theirs on the road first.

Sometimes it is hard to pull back, though. What about all the Stans with their FSD, they’ll go mental. It’s like Barnum & Bailey’s if they took away the hyperbole.

I was recently reminded of my love of the Hitchhiker’s Guide to the Galaxy and Douglas Adams’s thought processes. Then, last night, dog-walking on a rough, twisty, country road with no road markings under a starry sky, I got to thinking about the level of intelligence and awareness an autonomous vehicle would need to navigate the same road. With continuing advances in machine intelligence, maybe it is inevitable that one day, very likely not in our lifetimes, humans can make machines that can cope with such real driving problems.

That thought (and maybe the starry sky, including Sirius) brought to mind the elevators made by Sirius Cybernetics Corporation, which are so intelligent they can see slightly into the future to predict the floors where passengers will be waiting. However, being doomed to going up and down in elevator shafts, they develop mental problems and sulk in the basement. If vehicles brainy enough to cope with all possible driving environments are ever made, will they really be happy to be at the beck and call of humans, and spending most of their time sitting motionless in parking places?

I think this is relevant, too, about Sirius Cybernetics Corporation products: “…their fundamental design flaws are completely hidden by their superficial design flaws.”

I wish that wasn’t applicable to several companies I’ve worked for.

I would rather be a Kia hamster than a Tesla Guinea pig.

The article mentions it helping out when you have to pee.

Does autopilot empty the Gatorade bottle for you then place it where needed?

Many good points made. Note, though, that of the “two paths” mentioned in this article that Tesla could have gone down, Tesla didn’t just choose one and not the other. It went down both. It uses the technology to continually scan for dangers and implement “active safety features” that include:

- Forward collision detection
- Green light chime
- Speed limit warning
- Automatic emergency braking
- Multi-collision braking
- Lane departure warning
- Blind spot collision warning
- Obstacle aware acceleration

It’s all detailed on their website.

Oh, so the tunnel video last week was the “multi-collision” braking safety feature. On point.

I opted not to make that post snarkastic, assuming any replies would handle that.

Meh… don’t really care. All these self-driving systems from all manufacturers are of no interest to me until they get good enough where I can *legally* take a nap in the back seat while the vehicle drives me to my destination.

And a *staged* promo video isn’t quite the same thing as a *faked* test.

And here’s the thing… anyone who knows anything about the term ‘Autopilot’ as it’s used in Aviation would know that using Autopilot does not mean the pilot can stop paying attention.

And Tesla makes it clear that their system requires that the driver has to continue to pay attention.

It is useful tech to at least some drivers even though I personally think it isn’t worth the cost.

OK Stan, can you explain to us the difference between faked and staged in this specific case?

Faked would include some sort of text indicating that the tech is ready to go and that the driver is only there for liability purposes, whereas staged would indicate that this is projected future use and clearly warn people that it is not currently capable of what is being advertised as possible later.

This is faked.

And Tesla makes it clear that their system requires that the driver has to continue to pay attention.

Tesla makes it clear to who that the system requires that? (derailing for a second, is that an example of when I should use “whom”? I’ve never understood that particular rule.)

Fairly sure that the courts have taken the stance that many standardized contracts (such as EULAs) are too unwieldy and dense for the average consumer to fully understand.

This is backed up by examples of average consumers sleeping, or having sex, in Teslas as they drive down the road.

I agree with you on the lack of clarity around attentiveness.

As to the who/whom question, an easy trick is to throw he/him in its place. “Tesla makes it clear to he” vs “Tesla makes it clear to him.” If it is “him,” it’s “whom.” There are, of course, some situations where nothing is going to sound right, but this probably covers 90% of uses. That said, common practice is really making “who” acceptable in most usage, anyway, and it’s not like using the wrong one sacrifices any clarity.

Sweet. That’s easy as hell to remember. Thanks, man.

This is true: “faked” would be including false data, or displaying functions that are not real. Staged would be showing what a prototype or completed unit is expected to be capable of, but isn’t yet, nor is it claimed to be. In context, this is both faked and staged: “staged” because it was meant to display the intended ability of the Tesla, and faked in that they never said it was staged, and it used technology not intended for the final version.

What anyone knows about autopilot is immaterial, unless Tesla was intending to sell the cars only to those in the airline industry. I would wager that outside the airline industry, “autopilot” is understood as “performing the task without thinking or paying attention”; in fact, that’s the definition when it’s used as an idiom. I would also wager few can differentiate between Autopilot and FSD. We need to remember (as Jason regularly points out) that the connotation being presented by these car companies is way more powerful than the denotation.

In the Buick ad showing the Enclave parallel parking, 0.01% of people see that as “Whoa, you need to be ready, because if you’re not, 2 tons of Buick will destroy the cars you’re parking between.” No, the other 99.99% see/hear “It does it for me. I can be gathering my things from the passenger seat, checking my phone; the Buick has got this.” In addition, we’re talking about average drivers, a majority of whom don’t pay enough attention when driving regular cars.

I have no interest in the technology either, but I care immensely, and so should you, if you or people you like drive. Tesla playing fast and loose with testing and messaging, paired with cars that aren’t ready for the driving being asked of them, is frightening.

It’s unbelievable to me that on the one hand we have so many safety regulations defining the physical attributes of automobiles but, it would appear, NOTHING that is preventing Tesla from using the entire planet as a real-world beta test of software that we have ample evidence is capable of not just error, but causing real harm.

Jason, you are writing some of the most insightful pieces on this subject without diving into the depths of software and sensors and fearlessly facing off against the Teslerati. Keep it up. As a traffic safety engineer, I am deeply concerned about the implications of the marketing, driver messaging, and use of these systems. They are being used to sell cars without yet having much accountability. When car company marketing departments get to control the product, you get things like the Uber fatal crash and FSD.

I honestly don’t know how to process this. It just doesn’t seem possible that someone in charge of Autopilot wouldn’t at least be aware of what these concepts are.

I’m not really surprised by this. Maybe surprised that he’d admit everything in court and not try to waffle around getting himself implicated in this whole mess?

As to why I’m not surprised, remember in ’18 when Tesla stopped doing that brake test (or was it steering?) on one of their models? This basic test that every manufacturer puts their vehicles through before they leave the factory and Tesla just stopped in order to save a few minutes per vehicle so they could eke out a few more per month?

Yeah, that’s when I stopped being surprised by anything Tesla did in the name of cutting corners.

The “ODD thing” is absolutely indicative of a complete lack of competence.

Imagine you go see a cardiac surgeon and they’re like “Myocardial Infarction? I’m familiar with the words, but not much more than that. It’s some kind of heart thing, right?”

If you have no grasp of the fundamental technical language of your field, whatever it may be, your happy ass should not be employed in that field, much less placed in a position of authority.

Welp, this was supposed to be a reply to Glenn Stephens’ comment down below, but here we are. Woops!

That’s the problem: Tesla is trying to solve a car problem like it was a computer problem, using computer people, not car people.

They’re not computer people, either. They’re tech/business bros.

This is what happens when an ‘engineer’ who is not an engineer hires another ‘engineer’ who is not an engineer.

If either Musk or Elluswamy were real engineers they’d be up on professional misconduct charges.

It’s not like they rolled the thing down a hill and claimed it worked !! oh wait it pretty much is

I can’t fucking understand why elon is sued to oblivion…

*is not* sadly

You said something not nice about Elon. Prepare for the fire and pitchforks to arrive.

https://twitter.com/elonmusk/status/1387901003664699392

This is an overlooked Musk tweet admitting that without real AI, not the fake stuff we have now, his method of using cameras alone will not work. And no one can predict when we will have real AI.

That’s what AI wants you to think

Next thing you will tell me is the Tesla Bot wasn’t real in his first demo.

I still wonder who the human is that’s trapped inside the Tesla Bot, destined to be Elon’s metallic slave forever. That would explain why it shed a single tear onstage at that demo.

Forever? He’s probably already been laid off and gotten a job at General Dynamics.

If I never hear or read the word “robotaxi” again in my life, it will be too soon. Much like Musk’s promise to put a man on Mars within 10 years (a quote from 2011, repeated in 2021), everything is just a year or two away.

“Full Self Driving” is, and always has been, a bare-faced lie, which was obvious to even a casual observer who chose to examine it for a few moments. The only question is whether Elon and Co. will have to pay the price for the damage (both physical and philosophical) that this lie has done to people’s lives and to the automotive industry.

Kudos to you for calling it out and presenting the situation in a fair manner, Jason.

Also, Robot, Take the Wheel is a fantastic book!

The ODD thing is a gotcha. The guy may not be aware of the term “Operational Design Domain” but still understand the very basic engineering concept of designing a system for the environment in which it will operate and the users who will operate it.

As for the admission that the video was faked, that is horrendous and opens up Elon and Co. to a whole new range of fraud lawsuits. It is perfectly legal to be overly optimistic about your company’s prospects, but when you start producing fake evidence that you have achieved something, you have created evidence that you know it does not work as portrayed.

One might say that ElonCo isn’t “half-assing” the research behind their budiness development, they are “Theran-assing” it.

*business. Edit please! 😉

It’s okay , most of us speak fluent typo .

Sure, but I would expect Tesla’s Head of Autopilot Software to be aware of Operational Design Domain, though. And to have seen documents surrounding same. He also said he did not recall any discussions that acknowledged humans may have a lag time processing visual information. Which is really important when you are expecting a human to take over at minimal notice, since you need to acknowledge how much notice is required. Sure, he doesn’t need to be aware of every conversation, but it’s a pretty major thing that should have crossed his desk.

Everybody knows people’s reactions have a lag. It’s explicitly mentioned in driver’s ed to explain why you leave space between you and the car in front of you (and how much).

This Ashok fellow is either an idiot or a liar.

He could be both. I suspect that the visual processing lag came up, but people were convinced that they’d solve the self-driving problem completely enough to ignore the human. So they didn’t try to account for it, but that sounds particularly damning when you put something out there that requires human intervention.

I’m guessing he knew there had been conversations that brushed aside the concerns but thought that there would be no documentation of them and/or that he could say those conversations were outside his purview. Which is wild, considering that he is the head of autopilot software and human response times should be an important bit of information to accommodate in programming.